function (train, test = NULL, y, k = 3, algorithm = c("kd_tree",

"cover_tree", "brute"))

NULLSTA 9890 - Fundamentals of ML: II. Linear Algebra Foundations

\[\newcommand{\E}{\mathbb{E}} \newcommand{\R}{\mathbb{R}} \newcommand{\bx}{\mathbf{x}}\newcommand{\bbeta}{\mathbf{\beta}} \newcommand{\bX}{\mathbf{X}} \newcommand{\by}{\mathbf{y}} \newcommand{\bz}{\mathbf{z}} \newcommand{\bA}{\mathbf{A}} \newcommand{\bb}{\mathbf{b}} \newcommand{\bc}{\mathbf{c}} \newcommand{\bH}{\mathbf{H}} \newcommand{\bI}{\mathbf{I}}\]

Linear Algebra Review

In mathematics, a vector - random or otherwise - is a fixed-length ordered collection of numbers. When we want to be precise about the size of a vector, we often call it a “tuple”, e.g., a length-three vector is a “triple”, a length-four vector is a “4-tuple”, a length-five vector is a “5-tuple” etc..

So, these are all vectors:

- \((3, 4)\)

- \((1, 1, 1)\)

- \((1, 5, 6, 10)\)

When we want to talk about the set of vectors of a given size, we use the Cartesian product of sets. For two sets, \(A, B\), the product set \(A \times B\) is the set of all pairs, with the first element from \(A\) and the second from \(B\). In mathematical notation,

\[A \times B = \left\{(a, b): a \in A, b \in B\right\} \]

This set-builder notation is read as follows: “The Cartesian Product of \(A\) and \(B\) is the set of all pairs \((a, b)\) such that \(a\) is in \(A\) and \(b\) is in \(B\).”

If \(A\) and \(B\) are the same set, we define a Cartesian power as follows:

\[A^2 = A \times A = \left\{(a_1, a_2): a_1 \in A, a_2 \in A\right\}\]

Note that even though the sets \(A\) and \(A\) in this product are the same, the elements in each pair may vary. For example, if \(A = \{1, 2, 3\}\), we have

\[A^2 = \left\{(1, 1), (1, 2), (1, 3), (2, 1), (2, 2), (2, 3), (3, 1), (3,2), (3, 3)\right\}\]

Note that vectors are ordered pairs so \((2, 1) \neq (1, 2)\). From here, it should be pretty easy to convince yourself that set sizes play nicely with Cartesian products:

- \(|A \times B| = |A| |B|\)

- \(|A^k| = |A|^k\)

The most common set of vectors we use are those where each element is an arbitrary real number. The set of vectors of length \(n\) (\(n\)-tuples) is thus \(\R^n\). We rarely mix vectors of different lengths, so we don’t really have a name or notation for the “combo pack” \(\R^2 \cup \R^3 \cup \R^4\).

Conventionally, vectors are written in bold (if on a computer) or with a little arrow on top (hand written): so a vector called “x” would be denoted \(\mathbf{x}\) or \(\vec{x}\). The elements of \(\bx\) are denoted by subscripts \(\bx = (x_1, x_2, \dots, x_n)\).

Vector Arithmetic

We have three arithmetic operations we can perform on general vectors. The simplest is scalar multiplication. A scalar is a non-vector number, i.e., a ‘regular’ number. Scalar multiplication consists of applying the scalar independently to each element of a vector.

\[\alpha \bx = \alpha(x_1, x_2, \dots, x_n) = (\alpha x_1, \alpha x_2, \dots, \alpha x_n)\]

For example, if \(\bx = (3, 4)\) and \(\alpha = 2\), we have \[\alpha \bx = (6, 8)\]

Note that the output of scalar multiplication is always a vector of the same length as the input.

We also have the ability to add vectors. This again is performed element-wise.

\[\bx + \by = (x_1, \dots, x_n) + (y_1, \dots, y_n) = (x_1 + y_1, \dots, x_n + y_n) \]

Note that we can’t add vectors of different lengths (recall our “no mixing” rule) and the output length is always the same as the input lengths.

Finally, we have the vector inner product, defined as:

\[\langle \bx, \by \rangle = x_1y_1 + x_2y_2 + \dots + x_ny_n \]

You might have seen this previously as the “dot” product. The inner product takes two length-\(n\) vectors and gives back a scalar. This structure might seem a bit funny, but as we’ll see below, it’s actually quite useful.

You might ask if there’s a “vector-out” product: there is one, with the fancy name “Hadamard product”, but it doesn’t play nicely with other tools, so we don’t use it very much.

These tools play nicely together:

- \(\alpha(\bx + \by) = \alpha \bx + \alpha \by\) (Distributive)

- \(\langle \alpha \bx, \by \rangle = \alpha \langle \bx, \by \rangle\) (Associative)

- \(\langle \bx, \by \rangle = \langle \by, \bx \rangle\) (Commutative)

Vector Length and Angle

We sometimes want to think about the “size” of a vector, analogous to the absolute value of a scalar. In scalar-world, we say “drop the sign” but there’s not an obvious analogue to a sign for a vector. For instance, if \(\bx = (3, -4)\) is \(\bx\) “positive”, “negative” or somewhere in beetween?

We note a trick from scalar-land: \(|x| = \sqrt{x^2}\). We can use the same idea for vectors:

\[ \|\bx\| = \sqrt{\langle \bx, \bx\rangle} = \sqrt{\sum_{i=1}^n x_i^2}\]

This quantity, \(\|\bx\|\), is called the norm or length of a vector. We use the double bars to distinguish it from the absolute value of a scalar, but it’s fundamentally the same idea.

In \(\R^2\), we recognize this formula for length as the Pythagorean theorem:

\[ \|(3, 4)\| = \sqrt{3^2 + 4^2} = \sqrt{25} = 5 \]

We also sometimes want to define the angle between two vectors. We can define this as:

\[ \cos \angle(\bx, \by) = \frac{\langle \bx, \by\rangle}{\|\bx\|\|\by\|} \Leftrightarrow \angle(\bx, \by) = \cos^{-1}\left(\frac{\langle \bx, \by\rangle}{\|\bx\|\|\by\|}\right)\]

We won’t use this formula too often for implementation, but it’s good to have it for intuition. In particular, we note that angle is a proxy for sample correlation, justifying the common vernacular of “orthogonal”, meaning “at right angles”, for “uncorrelated” or “unrelated.”

Matrices

An \(n\)-by-\(p\) array of numbers is called a matrix; here the first dimension is the number of rows while the second is the number of columns. So \[\bA = \begin{pmatrix} 1 & 2 \\ 3 & 4 \\ 5 & 6 \end{pmatrix}\] is a 3-by-2 matrix. We denote the set of matrices of a given size as \(\R^{n \times p}\), extending slightly the notation we use for vectors.

In this course, we will use matrices for two closely-related reasons:

- To organize our data

- To specify and manipulate linear models

Specifically, if we have \(n\) training samples, each of \(p\) covariates (predictors), we will arrange them in a matrix traditionally called \(\bX \in \R^{n \times p}\). Here, each row of \(\bX\) corresponds to an observation. Statisticians tend to call this matrix a design matrix because (historically) it was something designed as part of an experiment; the name got carried forward into the observational (un-designed) setting. You may also hear it called the ‘data matrix’.

Suppose we have a design matrix \(\bX \in \R^{n \times p}\) and a vector of regression coefficients \(\mathbf{\beta} \in \R^p\). We can use matrix multiplication to make predictions about all observations simultaneously.

Specifically, recall that the standard (multivariate) linear model looks like:

\[\hat{y} = \sum_{i=1}^p x_i\beta_i\]

For a single observation, this can be written in vector notation as

\[\hat{y} = \bx^{\top}\bbeta\]

If we have \(n\) observations, we can stack them in a vector as:

\[\begin{pmatrix} \hat{y}_1 \\ \hat{y}_2 \\ \vdots \\ \hat{y}_n \end{pmatrix} = \begin{pmatrix} \bx_1^{\top}\bbeta \\ \bx_2^{\top}\bbeta \\ \vdots \\ \\bx_n^{\top}\bbeta \end{pmatrix}\]

We can connect this with our design matrix \(\bX\) by using the above as a definition of matrix-vector multiplication:

\[\hat{\by} = \bX\bbeta = \begin{pmatrix} \bx_1^{\top}\bbeta \\ \bx_2^{\top}\bbeta \\ \vdots \\ \\bx_n^{\top}\bbeta \end{pmatrix}\]

Here, matrix multiplication proceeds by taking the inner product of each row with the (column) vector \(\bbeta\). This may feel a bit unnatural at first, but with a bit of practice, it will become second nature. Note that we don’t need a transpose on \(\bX\): the multiplication ‘auto-transposes’ in some sense.

It is always helpful to keep track of dimensions when doing matrix multiplication: checking that matrices and vectors have the right size is a useful way to make sure you haven’t done anything too wrong. In general, we can only multiply a \(m\)-by-\(n\) matrix with a \(n\)-by-\(p\) matrix and the result is a \(m\)-by-\(p\) matrix (the \(n\)-dimension gets reduced to a scalar by the inner product). Formally, we have something like

\[ \R^{m \times n} \times \R^{n \times p} \to \R^{m \times p}\]

For purposes of this, you can always think of an \(n\)-vector as a \(n\)-by-1 “column” matrix, giving us:

\[\underbrace{\bX}_{\R^{n \times p}} \underbrace{\bbeta}_{\R^p} = \underbrace{\hat{\by}}_{\R^{n \times 1}}\]

Spectral Properties of Symmetric Matrices

An important class of matrices we will consider are symmetric matrices, which are just what the name sounds like. These come up in several key places in statistics, none more important than the covariance matrix, typically denoted \(\Sigma\). Recall that the covariance operator \(\mathbb{C}\) is symmetric (\(\mathbb{C}[X, Y] = \mathbb{C}[Y, X]\)) so the covariance matrix of a random vector turns out to be symmetric as well.

Another common source of symmetric matrices is when a matrix is multiplied by its transpose: you should convince yourself that \(\bX^{\top}\bX \in \R^{p \times p}\) is a symmetric matrix.

Ordinary Least Squares

Suppose we want to fit a linear model to a given data set (for any of the reasons we discuss in more detail below): how can we choose which line to fit? (There are infinitely many!)

Since our goal is minimizing test error, and we hope training error is at least a somewhat helpful proxy for training error, we can pick the line that minimizes training error. To do this, we need to commit to a specific measure of error. As the name Ordinary Least Squares suggests, OLS uses (mean) squared error as its target.

Why is MSE the right choice here? It turns out that MSE is very nice computationally, but the reason is actually more fundamental: given a random variable \(Z\), suppose we want to minimize the \((Z - \mu)\) for some \(\mu\): it can be shown that

\[ \E[Z] = \text{argmin}_{\mu \in \R} \E[(Z - \mu)^2]\]

Prove this for yourself.

Hint: Differentiate with respect to \(\mu\).

That is, the quantity that minimizes the MSE is the mean. So when we fit a line to some data by OLS, we are implicitly trying to fit to \(\E[y]\) - a very reasonable thing to do!

Specifically, given training data \(\mathcal{D} = \{(\bx_i, y_i)\}_{i=1}^n\) where each \(\bx_i \in \R^p\), OLS finds \(\bbeta \in \R^p\) such that \[ \hat{\bbeta} = \text{argmin}_{\bbeta \in \R^p} \frac{1}{n}\sum_{i=1}^n \left(y_i - \sum_{j=1}^p \beta_jx_j\right)^2 = \text{argmin}_{\bbeta \in \R^p} \frac{1}{n}\sum_{i=1}^n \left(y_i - \bx^{\top}\bbeta\right)^2\]

Some possibly new notation here:

\(\bx^{\top}\bbeta\) is the (inner) product of two vectors: defined as the sum of their elementwise products.

-

Optimization problems:

- \[\hat{x} = \text{argmin}_{x \in \mathcal{C}} f(x)\]

- \[f_* = \text{min}_{x \in \mathcal{C}} f(x)\]

These problems say: find the value of \(x\) in the set \(\mathcal{C}\) that minimizes the function \(f\). \(\text{argmin}\) says ‘give me the minimizer’ while \(\min\) says give me the minimum value’. These are related by \(f_* = f(\hat{x})\)

The function \(f\) is called the objective; the set \(\mathcal{C}\) is called the constraint set.

Ordinary Least Squares refers to the use of an MSE objective without any additional constraints.

Note the general structure of our approach here:

- Define the loss we care about

- Set up an optimization problem to minimize loss on the training set

- Solve optimization problem

ML folk call this empirical risk minimization (ERM) since we’re minimizing the risk (average loss) on the data we can see (the training data). Statisticians call this \(M\)-estimation, since it defines an estimator by Minimization of a measure of ‘fit’. Whatever you call it, it’s a very useful ‘meta-method’ for coming up with ML methods.

How does this compare with Maximum Likelihood Estimation?

-

We set up this ERM method using mean squared error - what happens with other errors? Specifically, formulate this ERM for

- mean absolute error

- mean percent error

and compare to OLS.

So far we’ve set up OLS as \[ \hat{\bbeta} = \text{argmin}_{\bbeta \in \R^p} \frac{1}{n}\sum_{i=1}^n \left(y_i - \bx^{\top}\bbeta\right)^2\]

We can clean this up to make additional analysis easier:

- Let \[\by = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix}\] be the (vertically stacked) vector of responses.

- Next look at our predictions: \[\hat{\by} = \begin{pmatrix} \bx_1^{\top}\bbeta \\ \bx_2^{\top}\bbeta \\ \vdots \\ \bx_n^{\top}\bbeta \end{pmatrix} = \begin{pmatrix} \bx_1^{\top} \\ \bx_2^{\top} \\ \vdots \\ \bx_n^{\top} \end{pmatrix}\bbeta = \bX\bbeta\]

Hence, OLS is just \[\hat{\bbeta} = \text{argmin}_{\bbeta \in \R^p} \frac{1}{n} \|\by - \bX\bbeta\|_2^2\] Here \(\|\cdot\|_2^2\) is the (squared Euclidean or \(L_2\)) norm of a vector: defined by \(\|\bz\|_2^2 = \sum z_i^2\)

Do we actually need the \(1/n\) part?

Why is this called linear?

We’ve formulated OLS as \[\hat{\beta} = \text{argmin}_{\bbeta \in \R^p} \frac{1}{n} \|\by - \bX\bbeta\|_2^2.\] In order to solve this, it will be useful to modify it slightly to \[\hat{\beta} = \text{argmin}_{\bbeta \in \R^p} \frac{1}{2} \|\by - \bX\bbeta\|_2^2\]

Why is this ok to do?

You will show in Report #01 that the solution is given by

\[\hat{\beta} = (\bX^{\top}\bX)^{-1}\bX^{\top}\by\]

Prove this for yourself. What conditions are required on \(\bX\) for the inverse in the above expression to exist?

With this estimate, our in-sample predictions are given by:

\[\hat{\by} = \bX\hat{\bbeta} = \bX(\bX^{\top}\bX)^{-1}\bX^{\top}\by \]

This says that our predictions are, in some sense, just a linear function of the original data \(\by\). The matrix

\[\bX(\bX^{\top}\bX)^{-1}\bX^{\top} = \bH\]

is sometimes called the ‘hat’ matrix because it puts a hat on \(\by\) (\(\hat{\by}=\bH\by\)).

\(\bH\) can be shown to be a special type of matrix called a projector, meaning that it has eigenvalues all 0 or 1. For the hat matrix specifically, we can show that eigenvalues of \(\bH\) are \(p\) zeros and \(n - p\) ones.

This in turn implies that it is idempotent, meaning \(\bH^2 = \bH\bH = \bH\). (To show this, simply express \(\bH\) in terms of its eigendecomposition.) We can use this property to finally justify the in-sample MSE of OLS we have cited several times.

The in-sample MSE is given by:

\[\begin{align*} \text{MSE} &= \frac{1}{n}\|\by - \hat{\by}\|_2^2 \\ &= \frac{1}{n}\left\|\by - \bH\by\right\|_2^2 \\ &= \frac{1}{n}(\by - \bH\by)^{\top}(\by - \bH\by) \\ &= \frac{1}{n}(\by^{\top} - \by^{\top}\bH)(\by - \bH\by) \\ &= \frac{\by^{\top}\by - \by^{\top}\bH\by - \by^{\top}\bH\by + \by^{\top}\bH\bH\by}{n} \\ &= \frac{\by^{\top}\by - \by^{\top}\bH\by}{n} \\ &= \frac{\by^{\top}(\bI - \bH)\by}{n} \\ \implies \E[\text{MSE}] &= \E\left[\frac{\by^{\top}(\bI - \bH)\by}{n}\right] \\ &= \frac{1}{n}\E\left[\by^{\top}(\bI - \bH)\by\right] \end{align*}\]

To finish this, we need to know that the expectation of a symmetric quadratic form satisfies

\[\bx \sim (\mu, \Sigma) \implies \E[\bx^{\top}\bA\bx] = \text{Tr}(\bA\Sigma) + \mu^{\top}\bA\mu\]

for any random vector \(\bx\) with mean \(\mu\) and covariance matrix \(\Sigma\).

To apply this above, we note \(\by \sim (\bX\beta_*, \sigma^2 \bI)\), so we get

\[\begin{align*} \E\left[\by^{\top}(\bI - \bH)\by\right] &= \text{Tr}((\bI - \bH) \sigma^2 \bI) + (\bX\bbeta_*)^{\top}(\bI - \bH)(\bX\bbeta_*) \\ &= \sigma^2 \text{Tr}(\bI - \bH) + \bbeta_*^{\top}\bX^{\top}(\bI - \bH)\bX\bbeta_* \\ &= \sigma^2 \text{Tr}(\bI - \bH) + \bbeta_*^{\top}(\bX^{\top}\bX - \bX^{\top}\bH\bX)\bbeta_* \\ &= \sigma^2 \text{Tr}(\bI - \bH) + \bbeta_*^{\top}(\bX^{\top}\bX - \bX^{\top}\bX(\bX^{\top}\bX)^{-1}\bX^{\top}\bX)\bbeta_* \\ &= \sigma^2 \text{Tr}(\bI - \bH) + \bbeta_*^{\top}(\bX^{\top}\bX - \bX^{\top}\bX)\bbeta_* \\ &= \sigma^2 \text{Tr}(\bI - \bH) + \bbeta_*^{\top} \mathbf{0}\bbeta_* \\ &= \sigma^2 \text{Tr}(\bI - \bH) \\ &= \sigma^2 (\text{Tr}(\bI) - \text{Tr}(\bH)) \end{align*}\]

Recall that the trace is simply the sum of the eigenvalues, so this last term becomes \(\sigma^2(n - p)\), finally giving us:

\[\E[\text{MSE}] = \frac{\sigma^2(n-p)}{n} = \sigma^2\left(1 - \frac{p}{n}\right)\]

Whew! That was a lot of work! But can you imagine how much more work this would have been without all of these matrix tools?

Make sure you can justify every step in the derivation above. This is a particularly long computation, but we will use the individual steps many more times in this course.

Bias-Variance Decomposition

In Report #01, you will show that, under MSE loss, our expected test error can be decomposed as

\[\text{MSE} = \text{Bias}^2 + \text{Variance} + \text{Irreducible Error}\]

Let’s show how we can analyze these quantities for a KNN regression problem. Here, we’re using the ‘regression’ version of KNN since it plays nicely with MSE.1

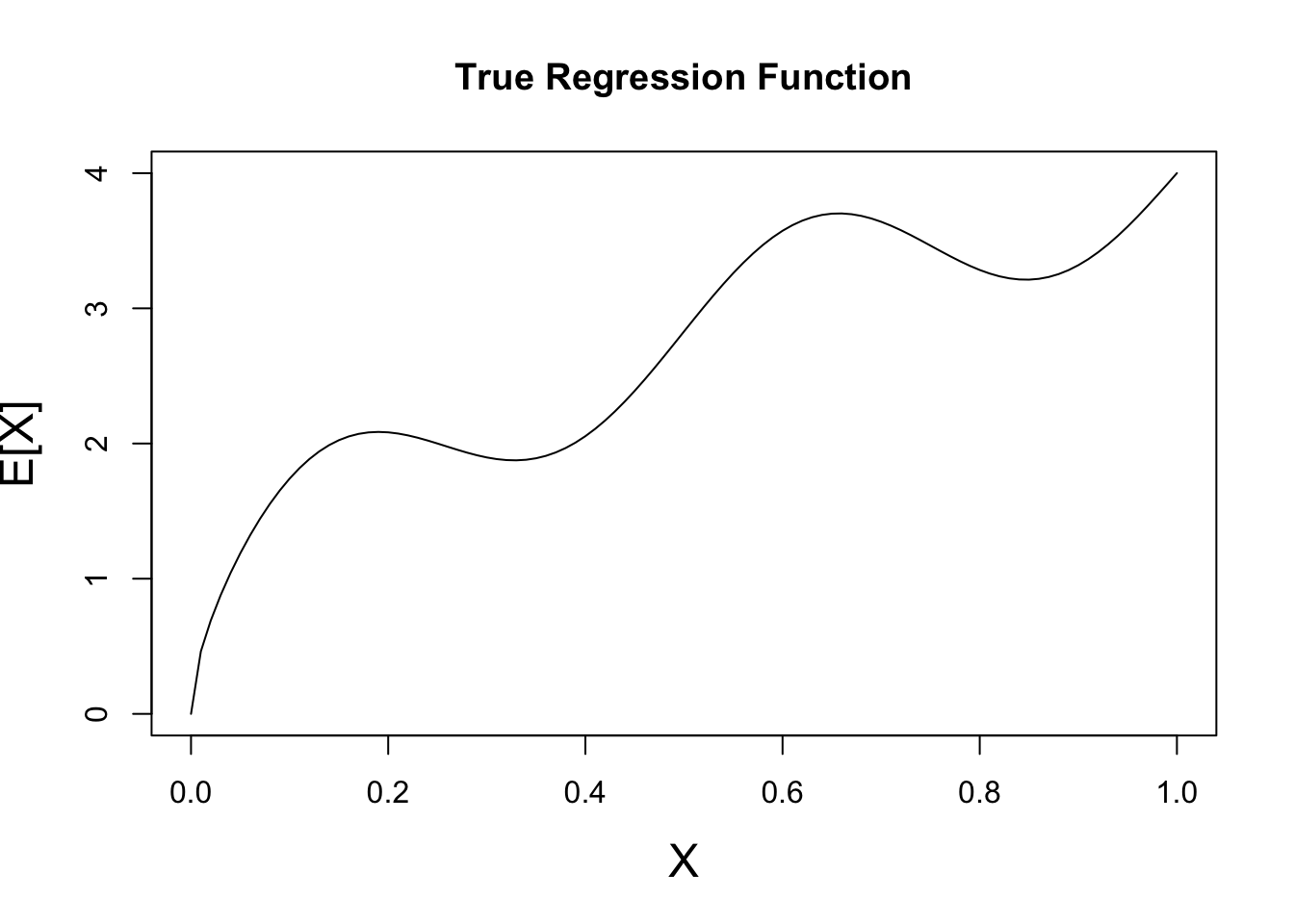

We also need a ‘true’ function which we’re trying to estimate. Let’s use the following model:

\[\begin{align*} X &\sim \mathcal{U}([0, 1]) \\ Y &\sim \mathcal{N}(4\sqrt{X} + 0.5 * \sin(4\pi * X), 0.25) \end{align*}\]

That is, \(X\) is uniform on the unit interval and \(Y\) is a non-linear function of \(X\) plus some Gaussian noise.

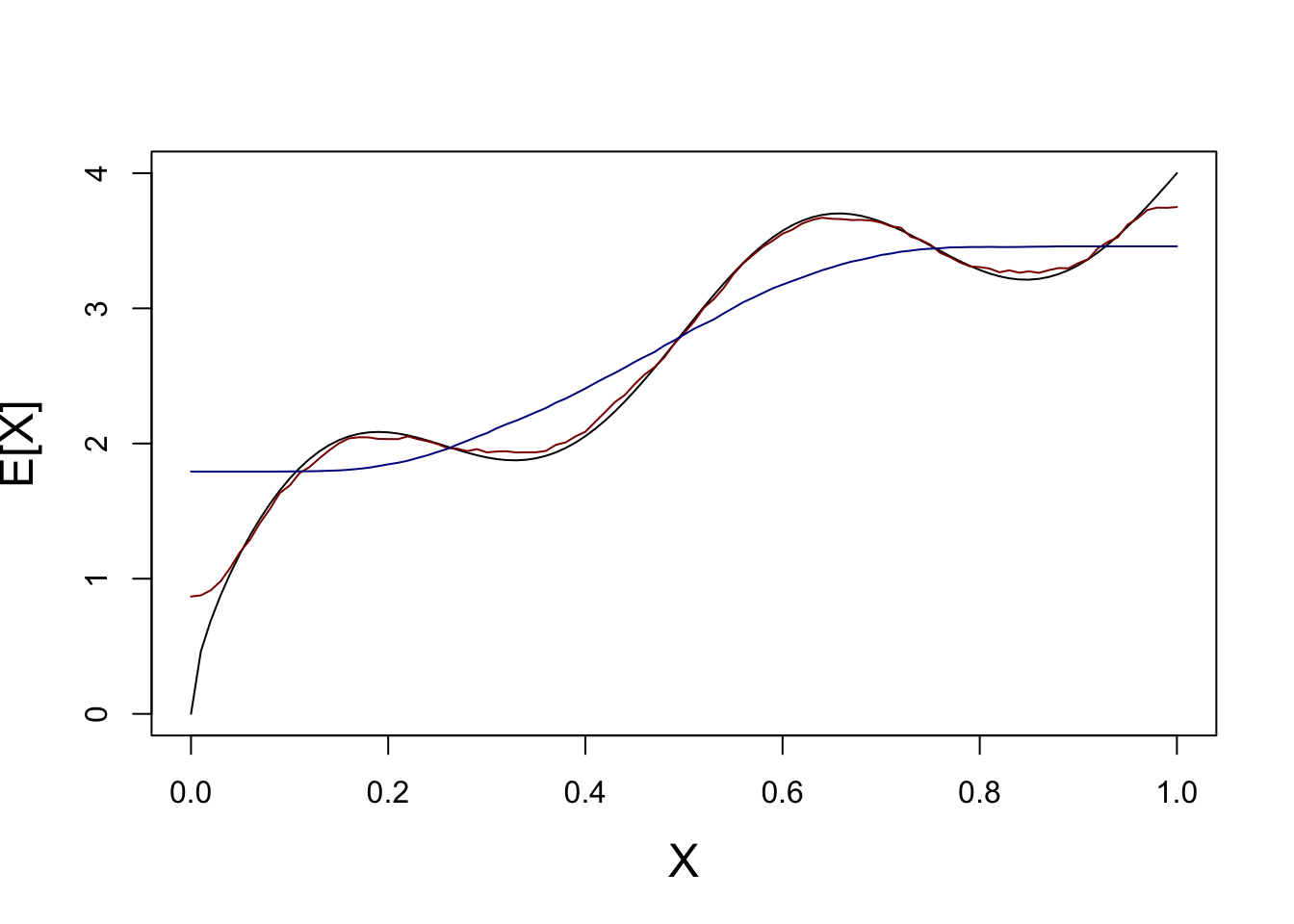

First let’s plot \(X\) vs \(\E[X]\) - under MSE loss this is our ‘best’ (Bayes-optimal) possible guess.

yfun <- function(x) 4 * sqrt(x) + 0.5 * sinpi(4 * x)

x <- seq(0, 1, length.out=101)

y_mean <- yfun(x)

plot(x, y_mean, type="l",

xlab="X", ylab="E[X]",

main="True Regression Function", cex.lab=1.5)

To generate training data from this model, we simply implement the PRNG components:

x_train <- runif(25, 0, 1) # 25 training points

y_train <- rnorm(25, mean=yfun(x_train), sd=0.5)

plot(x, y_mean, type="l",

xlab="X", ylab="E[X]",

cex.lab=1.5)

points(x_train, y_train)

We have some variance of course, but we can still “squint” to get the right shape of our function. Let’s see how KNN looks on this data.

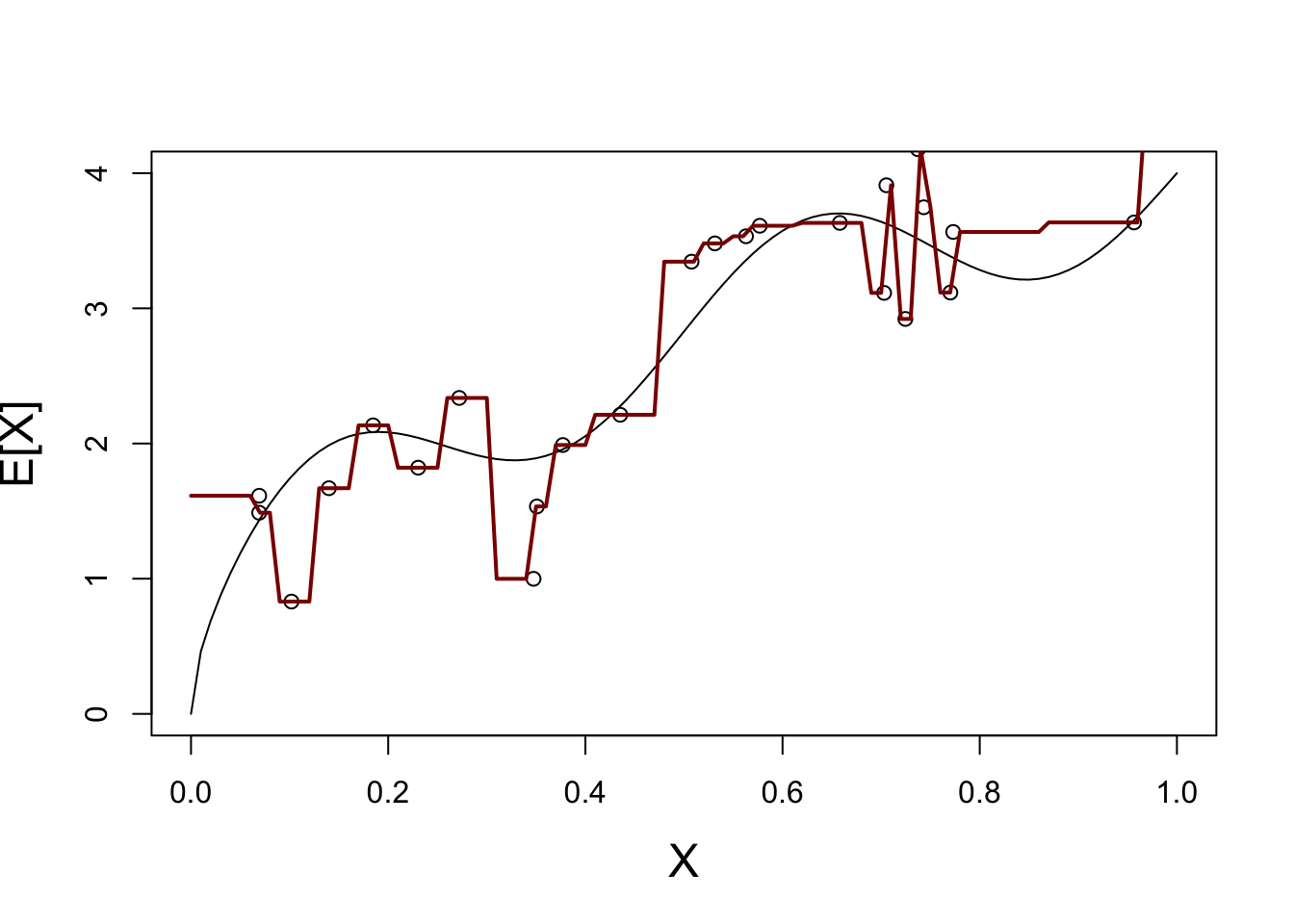

We start with \(K=1\):

X_train <- matrix(x_train, ncol=1)

plot_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

Y_hat <- knn.reg(train=X_train, y=y_train, k=1, test=plot_grid)$pred

plot(x, y_mean, type="l",

xlab="X", ylab="E[X]",

cex.lab=1.5)

points(x_train, y_train)

lines(plot_grid, Y_hat, col="red4", lwd=2)

This is not a great fit - what happens if we repeat this process may times?

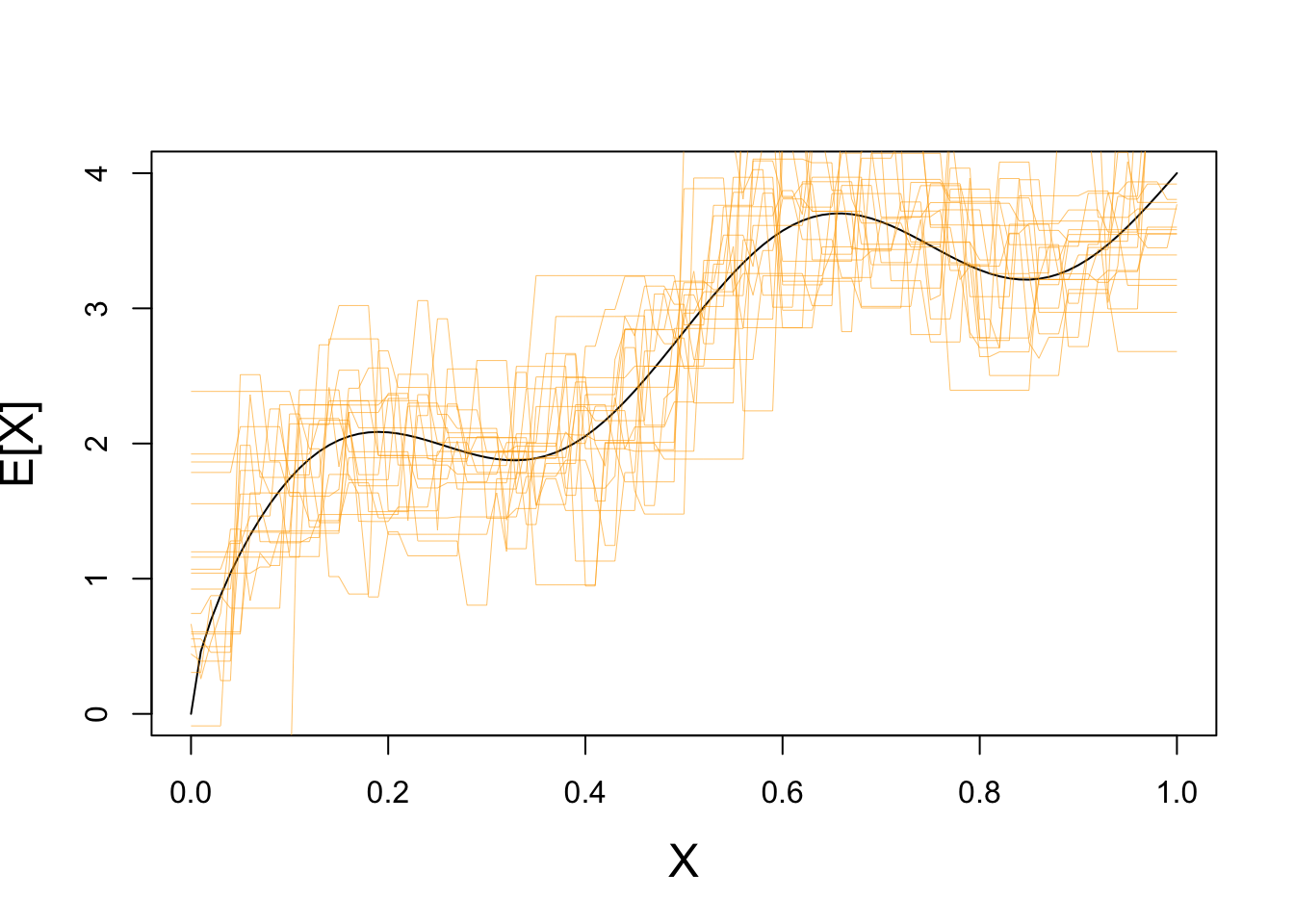

plot(x, y_mean, type="l",

xlab="X", ylab="E[X]",

cex.lab=1.5)

for(i in seq(1, 20)){

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

test_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

Y_hat <- knn.reg(train=X_train, y=y_train, k=1, test=test_grid)$pred

lines(test_grid, Y_hat, col="#FFAA0099", lwd=0.5)

}

Clearly we have some variance!

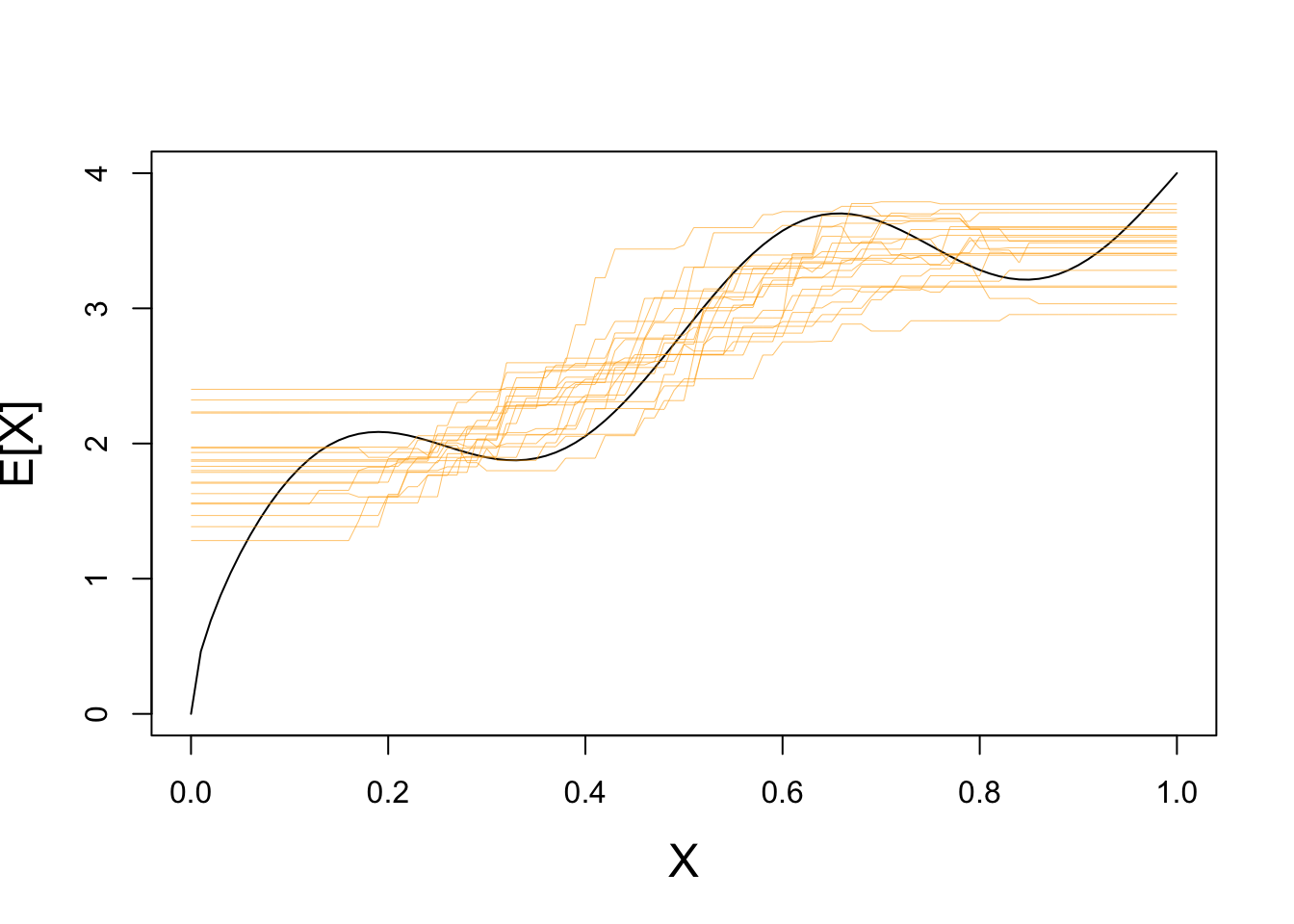

If we repeat with a higher value of \(K\), we see far less variance:

plot(x, y_mean, type="l",

xlab="X", ylab="E[X]",

cex.lab=1.5)

for(i in seq(1, 20)){

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

plot_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

Y_hat <- knn.reg(train=X_train, y=y_train, k=10, test=test_grid)$pred

lines(test_grid, Y_hat, col="#FFAA0099", lwd=0.5)

}

How well does KNN do on average?

That is, if we could repeat this process (infinitely) many times, how well would it recover the true regression function? Let’s try \(K=1\) and \(K=10\):

plot(x, y_mean, type="l",

xlab="X", ylab="E[X]",

cex.lab=1.5)

KNN_AVERAGE_PRED_K1 <- rowMeans(replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

plot_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

knn.reg(train=X_train, y=y_train, k=1, test=test_grid)$pred

}))

lines(test_grid, KNN_AVERAGE_PRED_K1, col="red4")

KNN_AVERAGE_PRED_K10 <- rowMeans(replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

plot_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

knn.reg(train=X_train, y=y_train, k=10, test=test_grid)$pred

}))

lines(test_grid, KNN_AVERAGE_PRED_K10, col="blue4")

We see here that, on average, KNN with \(K=1\) (red) basically gets the function just right - no bias!

On the other hand, because KNN with \(K=10\) smooths out the function, we see systematic errors (here oversmoothing). That’s some bias.

So which is better? - \(K=1\) - High variance, but low bias - \(K=10\) - Low variance, but high bias

We’ll have to look at some test error to see. For now, we’ll generate our test data exactly the same way as we generate our training data:

KNN_K1_ERROR <- replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

plot_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

# Generate from same model as before

X_test <- matrix(runif(25, 0, 1), ncol=1)

y_test <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

y_hat <- knn.reg(train=X_train, y=y_train, k=1, test=X_test)$pred

err = y_test - y_hat

})

KNN_K1_MSE <- mean(rowMeans(KNN_K1_ERROR^2))

KNN_K10_ERROR <- replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

plot_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

# Generate from same model as before

X_test <- matrix(runif(25, 0, 1), ncol=1)

y_test <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

y_hat <- knn.reg(train=X_train, y=y_train, k=10, test=X_test)$pred

err = y_test - y_hat

})

KNN_K10_MSE <- mean(rowMeans(KNN_K10_ERROR^2))

cbind(K1_MSE = KNN_K1_MSE,

K10_MSE = KNN_K10_MSE) K1_MSE K10_MSE

[1,] 1.954541 1.46504\(K=10\) does better overall!

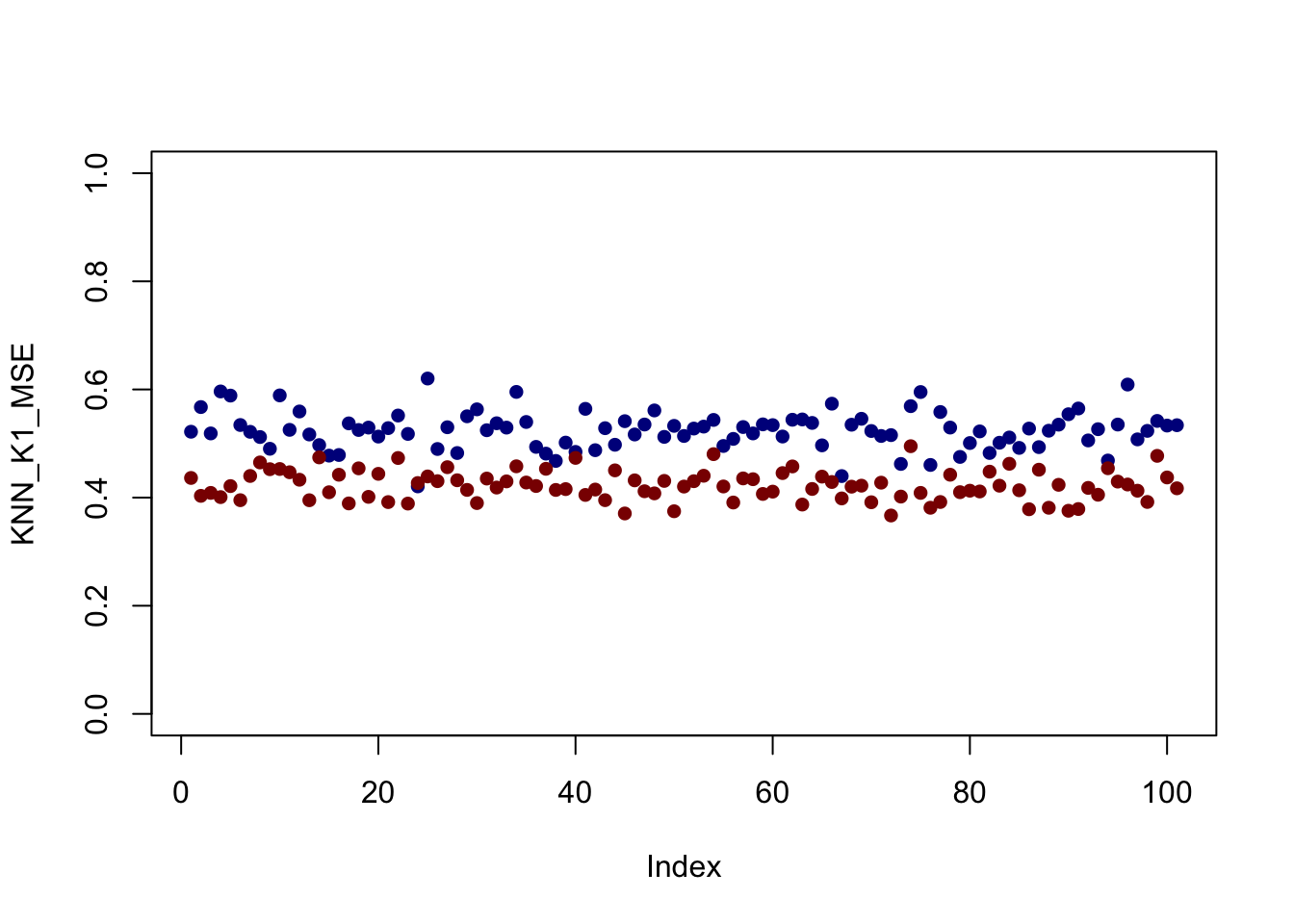

But does it do better everywhere or are some parts of the problem better for \(K=1\)?

Now we’ll be systematic in our test data - spacing it equally on the grid and computing ‘pointwise’ MSE:

KNN_K1_ERROR <- replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

# Generate from same model as before

test_grid <- seq(0, 1, length.out=101)

X_test <- matrix(runif(test_grid, 0, 1), ncol=1)

y_test <- matrix(rnorm(test_grid, mean=yfun(X_test), sd=0.5), ncol=1)

y_hat <- knn.reg(train=X_train, y=y_train, k=1, test=X_test)$pred

err = y_test - y_hat

})

KNN_K1_MSE <- rowMeans(KNN_K1_ERROR^2)

KNN_K10_ERROR <- replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

test_grid <- seq(0, 1, length.out=101)

X_test <- matrix(runif(test_grid, 0, 1), ncol=1)

y_test <- matrix(rnorm(101, mean=yfun(X_test), sd=0.5), ncol=1)

y_hat <- knn.reg(train=X_train, y=y_train, k=10, test=X_test)$pred

err = y_test - y_hat

})

KNN_K10_MSE <- rowMeans(KNN_K10_ERROR^2)

plot(KNN_K1_MSE, col="blue4", pch=16, ylim=c(0, 1))

points(KNN_K10_MSE, col="red4", pch=16)

It looks like - for this set up at least - \(K=10\) is better everywhere but that’s not always the case.

Play around with the size of the training data, the noise in the samples, and the data generating function (yfun) to see if you can get different behavior.

Also - is \(K=10\) really the optimal choice here? What would happen if we changed \(n\)?

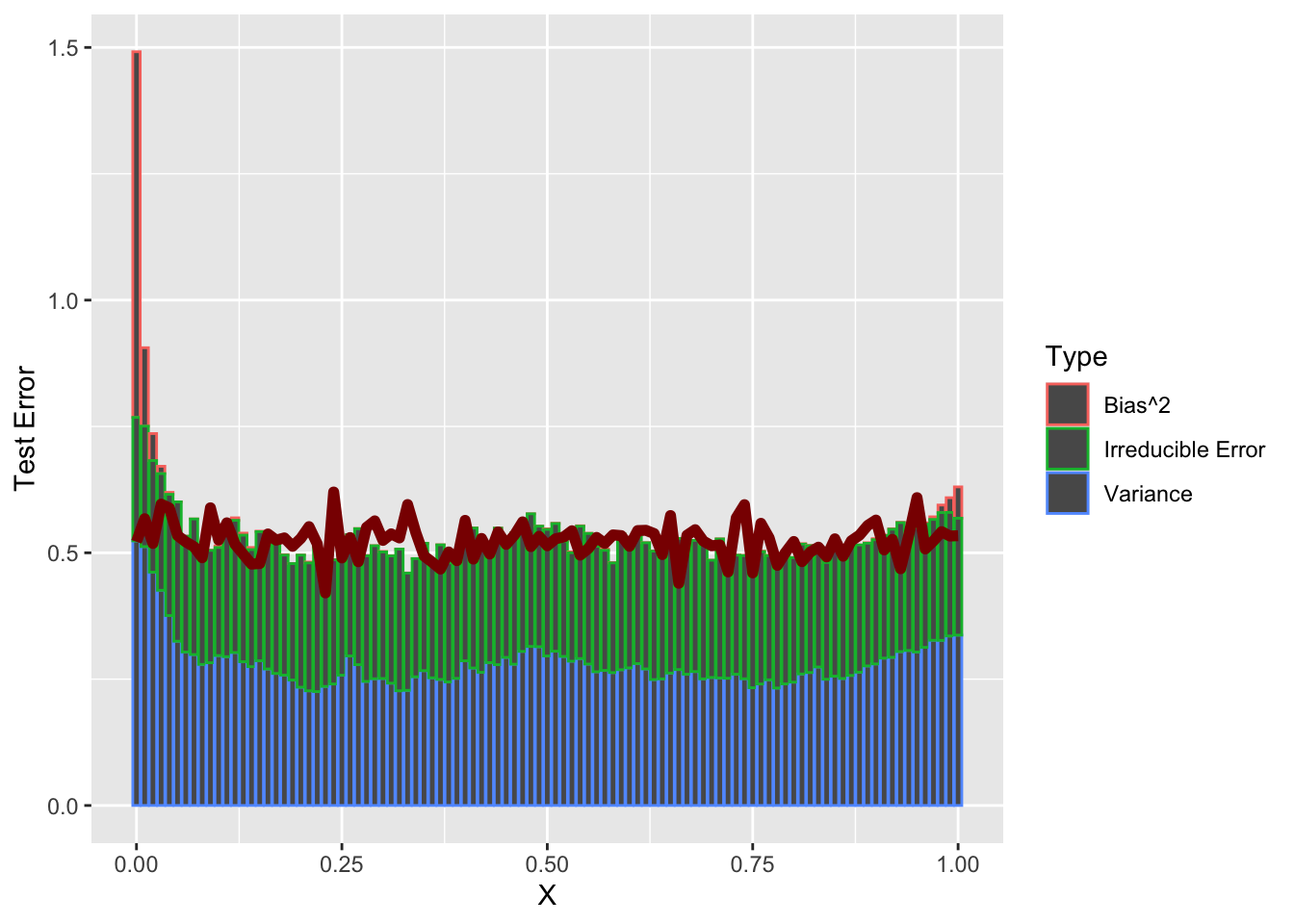

So, now that we have a good sense of (average) test error, can we verify our MSE decomposition?

Recall \[\begin{align*} \E[\text{MSE}] &= \text{Bias}^2 + \text{Variance} + \text{Irreducible Error} \\ \text{ where } \text{Bias}^2 &= \E\left[\left(\E[\hat{y}] - \E[y]\right)^2\right] \\ &= \left(\E[\hat{y}] - \E[y]\right)^2 \text{ (Why can I drop the outer expectation?)} \\ \text{Variance} &= \E\left[\left(\hat{y} - \E[\hat{y}]\right)^2\right] \\ \text{Irreducible Error} &= \E\left[\left(y - \E[y]\right)^2\right] \end{align*}\]

(Make sure you understand these definitions and how they work together!)

Let’s work these out using all the tools we built before.

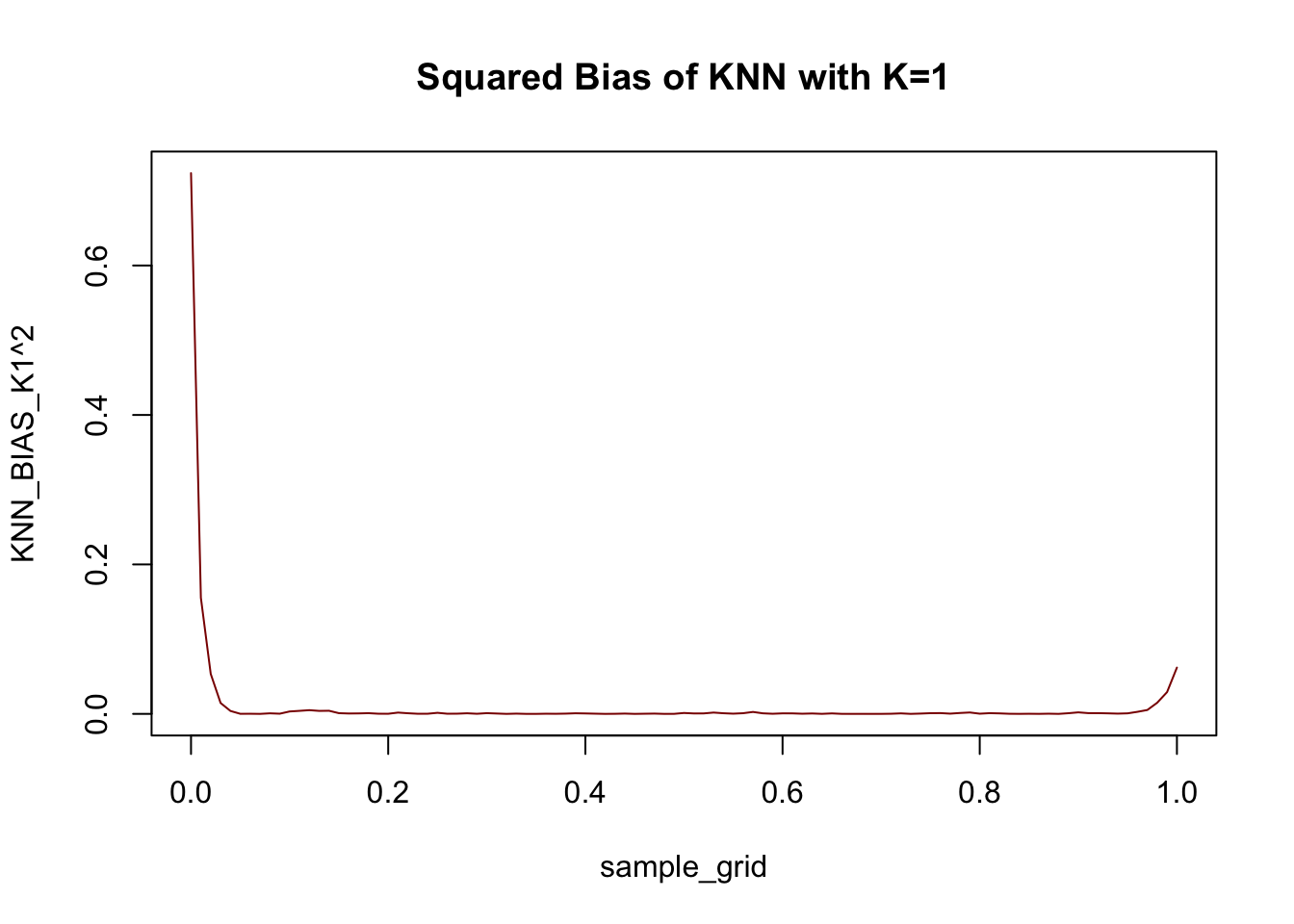

First, for the bias: - we already have \(\E[y]\) - this is just the yfun we selected - we can compute \(\E[\hat{y}]\) by running KNN many times and averaging the result

sample_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

KNN_AVERAGE_PRED_K1 <- rowMeans(replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

knn.reg(train=X_train, y=y_train, k=1, test=sample_grid)$pred

}))

KNN_BIAS_K1 <- KNN_AVERAGE_PRED_K1 - yfun(sample_grid)

plot(sample_grid, KNN_BIAS_K1^2, col="red4",

type="l", main="Squared Bias of KNN with K=1")

Not too much bias, but things do go a bit off the rails near the end points.

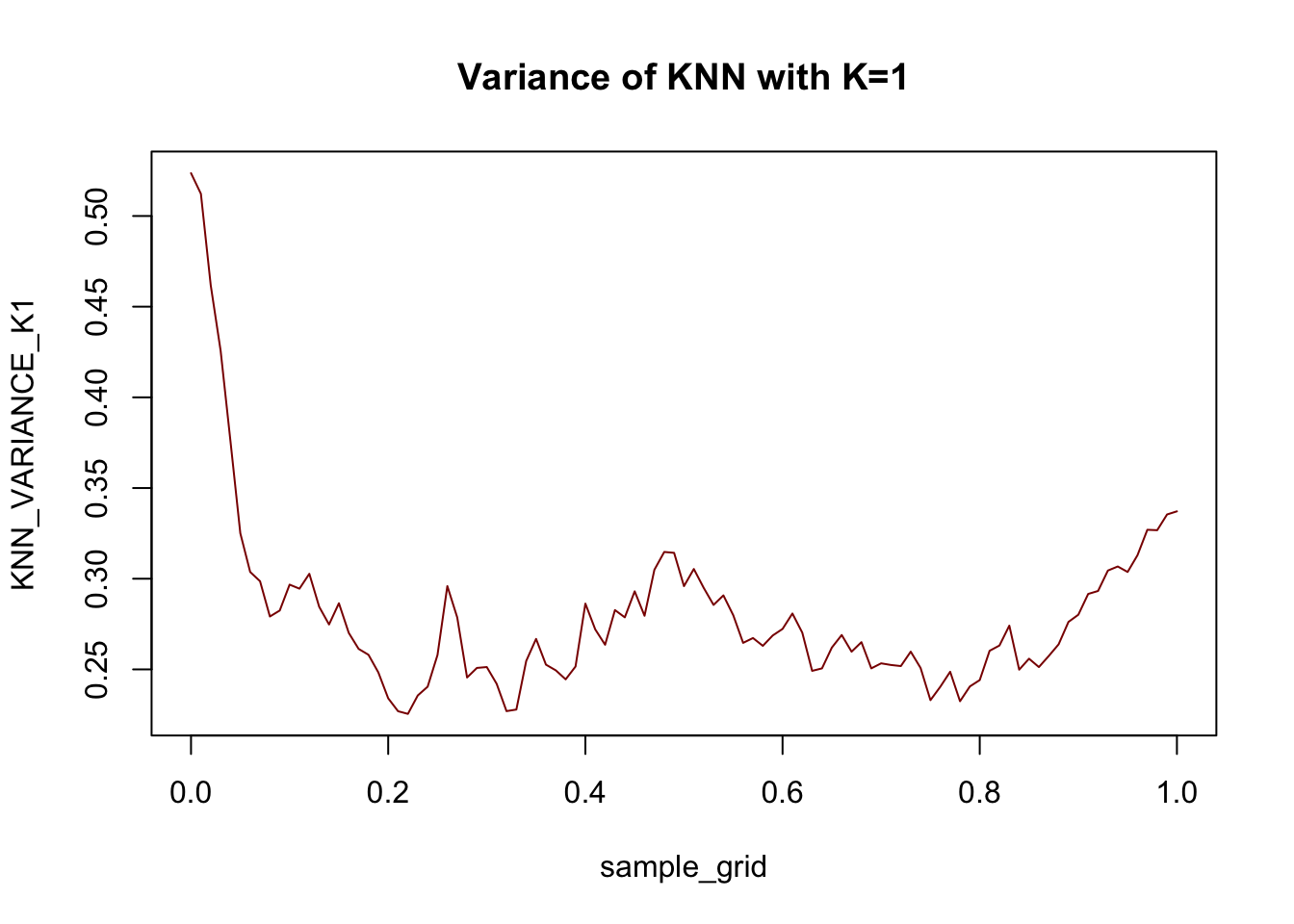

Next, we can compute variance pointwise:

sample_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

KNN_VARIANCE_K1 <- rowMeans(replicate(500, {

X_train <- matrix(runif(25, 0, 1), ncol=1)

y_train <- matrix(rnorm(25, mean=yfun(X_train), sd=0.5), ncol=1)

(knn.reg(train=X_train, y=y_train, k=1, test=sample_grid)$pred - KNN_AVERAGE_PRED_K1)^2

}))

plot(sample_grid, KNN_VARIANCE_K1, col="red4",

type="l", main="Variance of KNN with K=1")

For this data at least, the variance term is generally larger than the bias term: this is what we expect with a very flexible (high variance + low bias) model like \(1\)-NN.

Finally, irreducible error is just 0.25 everywhere (recall \(y \sim \mathcal{N}(\E[y], 0.25)\)).

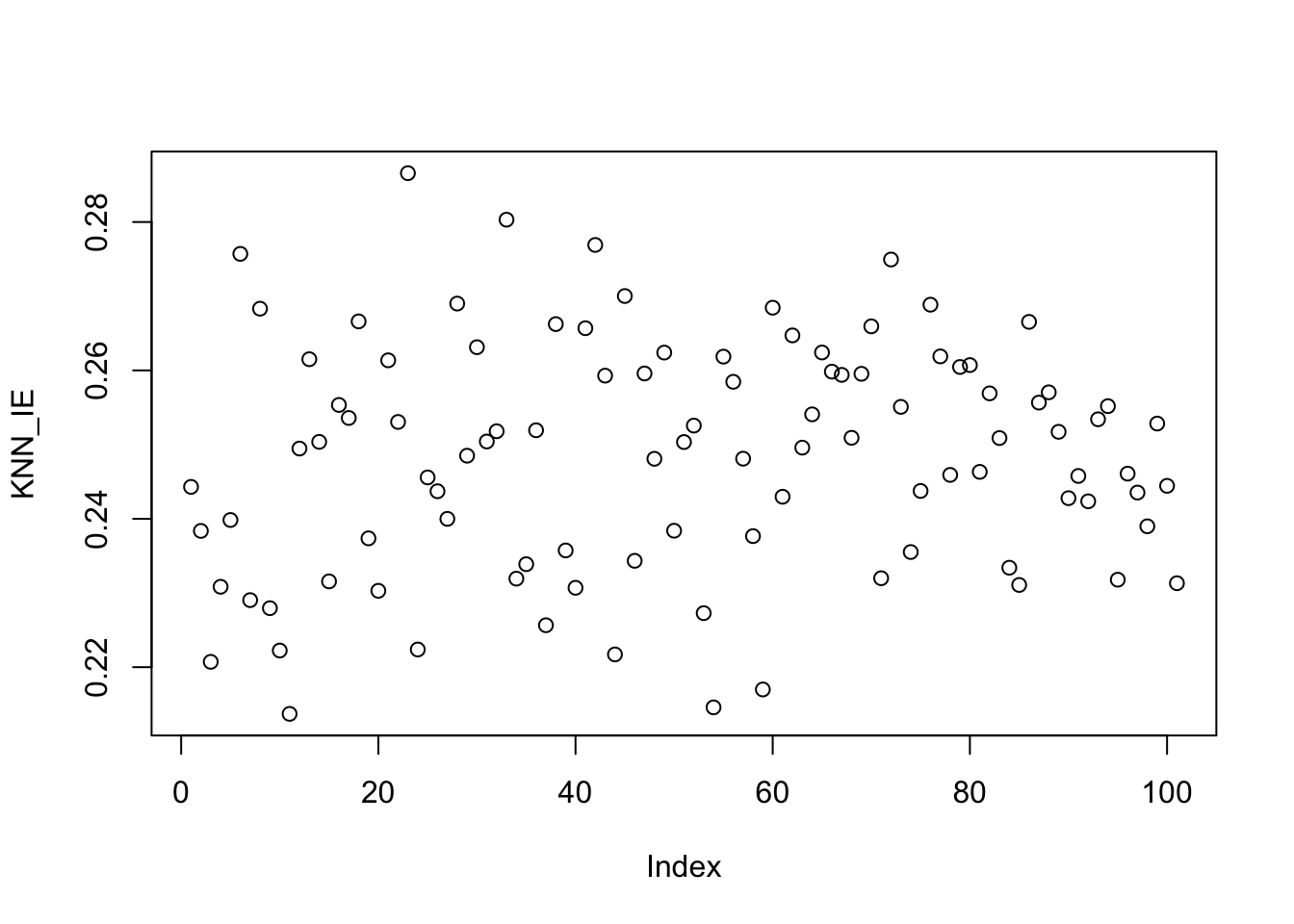

KNN_IE <- rowMeans(replicate(500, {

sample_grid <- matrix(seq(0, 1, length.out=101), ncol=1)

X_test <- matrix(sample_grid, ncol=1)

y_test <- matrix(rnorm(sample_grid, mean=yfun(X_test), sd=0.5), ncol=1)

y_best_pred <- matrix(yfun(X_test), ncol=1)

(as.vector(y_best_pred - y_test))^2

}))

plot(KNN_IE)

Put these together and we see the decomposition in action:

as compared to

mean(KNN_K1_MSE)[1] 0.5242858Visually,

library(dplyr)

library(tidyverse)

DECOMP_DATA <- data.frame(

sample_grid = sample_grid,

KNN_K1_BIAS2 = KNN_BIAS_K1^2,

KNN_K1_VARIANCE=KNN_VARIANCE_K1,

KNN_K1_IE = KNN_IE,

KNN_K1_MSE = KNN_K1_MSE) |>

pivot_longer(-sample_grid) |>

mutate(Error=value,

Type=case_when(

name=="KNN_K1_BIAS2" ~ "Bias^2",

name=="KNN_K1_IE" ~ "Irreducible Error",

name=="KNN_K1_VARIANCE" ~ "Variance",

name=="KNN_K1_MSE" ~ "Total Error"))

ggplot() +

geom_bar(data=DECOMP_DATA |> filter(Type != "Total Error"),

mapping=aes(x=sample_grid, y=Error, color=Type),

stat="identity") +

geom_line(data=DECOMP_DATA |> filter(Type == "Total Error"),

mapping=aes(x=sample_grid, y=Error),

color="red4", linewidth=2) +

xlab("X") + ylab("Test Error")

So a bit of weirdness at the left end point - but holds up as well as we might expect for \(N=25\) samples.

How do the relative magnitudes of these terms change as you adjust the parameters of the simulation?

Introduction to Convex Optimization

In this course, we will frequently want to find the minimizer of certain functions. Typically, these will arise as ERMs and the function will take a \(p\)-vector of inputs, e.g. regression coefficients, and produce a scalar loss output. Let us generally call this function \(f: \R^p \to \R\).

In some circumstances, we can take the derivative of \(f\), typically called the gradient in this context, and set it equal to zero. For example, suppose we want to minimize an expression of the form:

\[f(\bx) = \frac{1}{2}\bx^{\top}\bA\bx + \bb^{\top}\bx + c\]

where \(\bA\) is a symmetric strictly positive-definite matrix, \(\bb\) is an arbitrary \(p\)-vector, and \(c\) is a constant. Taking the gradient, we find

\[ \frac{\partial f}{\partial \bx} = \bA\bx + \bb\]

We set this to zero and find a crticial point at:

\[\begin{align*} \mathbf{0} &= \bA\bx + \bb \\ - \bb &= \bA\bx \\ \implies \bx &= -\bA^{-1}\bb \end{align*}\]

assuming that \(\bA\) is invertible. (Here, invertibility is implied by assuming \(\bA\) is strictly positive-definite.) As usual, we are not quite done here, as we must also check that this is a minimizer and not a maximizer or a saddle point. To do so, we take the second derivative to find

\[ \frac{\partial}{\partial \bx}\frac{\partial f}{\partial \bx} = \bA\]

In this multivariate context, we need the second derivative, a.k.a the Hessian, to be strictly positive-definite to guarantee that we have found a minimizer and this is indeed exactly what we assumed above. As discussed above, ‘definiteness’ plays the role of sign for many applications of matrix-ness. Here, by assuming strictly positive definite, we are essentially treating the matrix as strictly positive (\(>0\)), which is exactly the condition we need to guarantee a minimizer in the scalar case as well.

Hence, we have that the one minimizer of \(f\) is found at \(\bx_* = \bA^{-1}\bb\). Compare this to the scalar case of minimizing a quadratic \(\frac{1}{2}ax^2 + bx + c\) with minimizer at \(x = -b/a\).2

The above analysis works well, but it is essentially the only minimization we will be able to do in closed form in this course.3

For other functions, we will need to apply optimization: the mathematical toolkit for finding minimizers (or maximizers) of functions. Fortunately for us, many of the methods we begin this course with fall in the realm of convex optimization, a particularly nice branch of optimization.

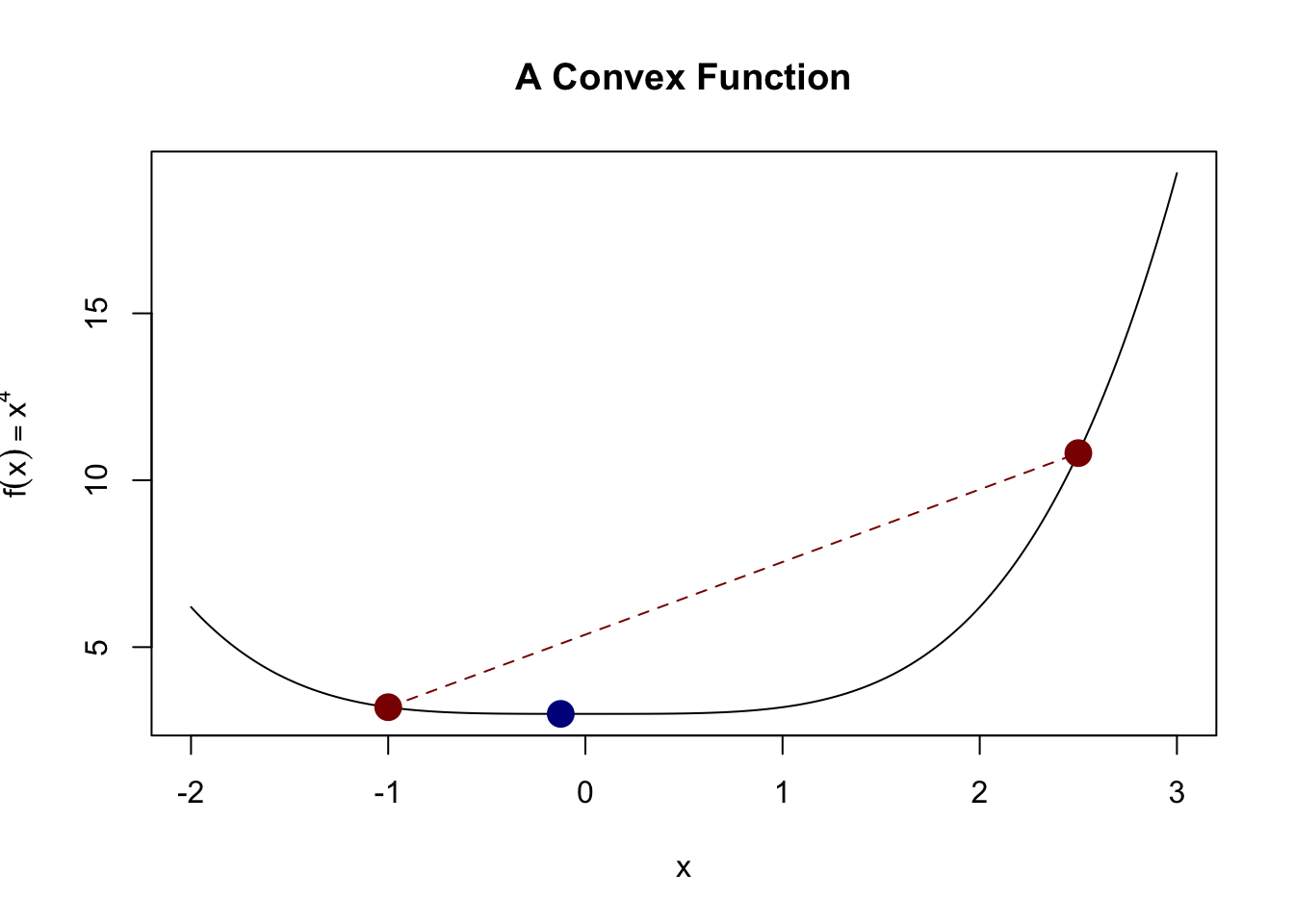

Convex Optimization refers to the problem of minimizing convex functions over convex sets. Let’s define both of these:

-

A convex function is one which satisfies this inequality at all points: \[f(\lambda \bx + (1-\lambda)\by) \leq \lambda f(\bx) + (1-\lambda) f(\by)\]

for any \(\bx, \by\) and any \(\lambda \in [0, 1]\).

This definition is a bit non-intuitive, but it basically implies that we have a “bowl-like” function. This definition captures the idea that the actual function value is always less than we might get from linear interpolation. A picture is helpful here:

Here, we see that the actual value of the function (blue dot) is less than what we would get if we interpolated the two red points (red line).

An alternative definition is that if \(f\) is twice-differentiable, its Hessian (2nd derivative matrix) is positive semi-definite.

-

A convex set is one that allows us to look “between” points. Specifically, a set \(\mathcal{C} \subseteq \R^p\) is convex if \[\bx, \by \in \mathcal{C} \implies \lambda \bx + (1-\lambda)\by \in \mathcal{C}\]

for all \(\bx, \by \in \mathcal{C}\) and any \(\lambda \in [0, 1]\).

Clearly these are related by the idea of looking “between” two points:

- Convex functions guarantee that this will produce something lower than naive interpolation; and

- Convex sets guarantee that the midpoint will be “allowable”.

To tie these together, note another alternative characterization of a convex function: one whose epigraph (the area above the curve in the plot) is a convex set.

For optimization purposes, these two properties imply a rather remarkable fact:

If \(\bx_0\) is a local minimum of \[\min_{\bx \in \mathcal{C}} f(\bx)\], then it is a global minimum.

This is quite shocking: if we find a point where we can’t improve by going in any direction, we are guaranteed to have found a global minimum and no point could be better. (It is possible to have multiple minimizers however: consider \(f(\bx) = 0\). Any choice of \(\bx\) is a global minimizer.) This lets us turn the “global” search problem into a “local” one.

Of course, this only helps us if we can find a local minimizer of \(f\). How might we do so? Let’s recall a basic calculus idea: the gradient of a function is a vector that points in the direction of greatest increase (the “steepest” uphill). So if we go in the opposite direction of the gradient, we actually will go “downhill”. In fact, this is basically all we need to start applying gradient descent.

Gradient Descent: Given a convex function \(f\):

- Initialize at (arbitrary) starting point \(\bx_0\).

- Initialize step counter \(k=0\).

- Repeat until convergence:

- Compute the gradient of \(f\) at \(\bx_k\): \(\left.\nabla f\right.|_{\bx=\bx_k}\)

- Set \(\bx_{k+1} = \bx_k - c \left.\nabla f\right.|_{\bx=\bx_k}\)

Repeated infinitely many times, this will converge to a local, and hence global, minimizer of \(f\). There are many variants of this basic idea4, mostly related to selecting the optimal step size \(c\), but this is the most important algorithm in convex optimization and it is important to understand it deeply.

We can apply it here to the 3D function \[f(\bx) = (x_1-2)^2 + (x_2-3)^2 + (x_3 - 4)^2.\] Clearly, we can see that the minimizer has to be at \((2, 3, 4)\), but our algorithm won’t use that fact.

Before we can implement this algorithm, we need the gradient, which is given by

\[f(\bx) = \left\|\bx - (2,3,4)^{\top}\right\|_2^2 \implies \nabla f = 2[\bx - (2, 3, 4)^{\top}]\]

x <- matrix(c(0, 0, 0), ncol=1) # We want to work exclusively with column vecs

converged <- FALSE

c <- 0.001 # Small step size

f <- function(x) sum((x - c(2, 3, 4))^2)

grad <- function(x) 2 * (x - matrix(c(2, 3, 4), ncol=1))

while(!converged){

g <- grad(x)

x_new <- x - c * g

if(sum(abs(x - x_new)) < 1e-5){

converged <- TRUE

}

x <- x_new

}

x [,1]

[1,] 1.998893

[2,] 2.998340

[3,] 3.997787We don’t get the exact minimizer, but we certainly get something ‘close enough’ for our purposes. In Report #01, you will use gradient descent and some variants to study the least squares problem.

Often, we will want to see how the value of \(f(\bx_k)\) changes over the course of the optimization. We expect that it will go down monotonically, but it may not be worth continuing the optimization if we have reached a point of ‘diminishing returns.’ You can do this by hand (evaluating \(f\) after each update and storing the results), but many optimizers will often track this automatically for you: e.g. TensorBoard.5

Footnotes

The Bias-Variance decomposition (and tradeoff) holds approximately for other loss functions, though the math is only this nice for MSE.↩︎

Note that we can only minimize this quadratic in the case where \(a\) is strictly positive so the parabola is upward facing. This is the scalar equivalent of the strict positive-definiteness condition we put on \(\bA\).↩︎

OLS and some basic variants (ridge regression) can be written in this ‘quadratic’ style. Can you see why?↩︎

For instance, of the 14 optimization algorithms included in base

pytorch, all but one are advanced versions of gradient descent. The exception isLBFGSwhich attempts to (approximately) use both the gradient and the Hessian (second derivative); computing the Hessian is normally quite expensive, soLBFGSuses some clever tricks to approximate the Hessian.↩︎-

Modern ML toolkits like

pytorchorTensorFloware (at heart) fancy systems to do two things automatically that we did ‘by hand’ in this example:- Compute gradients via a process known as ‘back-propogation’ or ‘autodiff’

- Implement (fancy) gradient descent