if(!dir.exists(file.path("data", "mp02"))){

dir.create(file.path("data", "mp02"), showWarnings=FALSE, recursive=TRUE)

}

library <- function(pkg){

## Mask base::library() to automatically install packages if needed

## Masking is important here so downlit picks up packages and links

## to documentation

pkg <- as.character(substitute(pkg))

options(repos = c(CRAN = "https://cloud.r-project.org"))

if(!require(pkg, character.only=TRUE, quietly=TRUE)) install.packages(pkg)

stopifnot(require(pkg, character.only=TRUE, quietly=TRUE))

}

library(tidyverse)

library(glue)

library(readxl)

library(tidycensus)

get_acs_all_years <- function(variable, geography="cbsa",

start_year=2009, end_year=2023){

fname <- glue("{variable}_{geography}_{start_year}_{end_year}.csv")

fname <- file.path("data", "mp02", fname)

if(!file.exists(fname)){

YEARS <- seq(start_year, end_year)

YEARS <- YEARS[YEARS != 2020] # Drop 2020 - No survey (covid)

ALL_DATA <- map(YEARS, function(yy){

tidycensus::get_acs(geography, variable, year=yy, survey="acs1") |>

mutate(year=yy) |>

select(-moe, -variable) |>

rename(!!variable := estimate)

}) |> bind_rows()

write_csv(ALL_DATA, fname)

}

read_csv(fname, show_col_types=FALSE)

}

# Household income (12 month)

INCOME <- get_acs_all_years("B19013_001") |>

rename(household_income = B19013_001)

# Monthly rent

RENT <- get_acs_all_years("B25064_001") |>

rename(monthly_rent = B25064_001)

# Total population

POPULATION <- get_acs_all_years("B01003_001") |>

rename(population = B01003_001)

# Total number of households

HOUSEHOLDS <- get_acs_all_years("B11001_001") |>

rename(households = B11001_001)STA 9750 Mini-Project #02: Making Backyards Affordable for All

Due Dates

- Released to Students: 2025-09-30

- Initial Submission: 2025-10-31 11:59pm ET on GitHub and Brightspace

-

Peer Feedback:

- Peer Feedback Assigned: 2025-11-03 on GitHub

- Peer Feedback Due: 2025-11-14 11:59pm ET on GitHub

Estimated Time to Complete: 9 Hours

Estimated Time for Peer Feedback: 1 Hour

Introduction

Housing affordability is one of the greatest challenges facing New York City today. Your instructor, a Houstonian by birth and affection, is a firm believer in the power of permissive zoning practices to reduce housing costs and to make cities more diverse, affordable, and dynamic. He proudly stands with the forces of YIMBYism, standing athwart local NIMBYs and shouting “build!” In this mini-project, we will identify America’s most “YIMBY” cities using a variety of census data sources and real estate indices. This project is inspired by a similar project from the YouTuber Ray Delahanty, a.k.a. CityNerd, which you can view here:

Note that our project is similar to CityNerd’s but our analysis is somewhat simplified, uses different techniques and data sources, and you should not expect your results to match perfectly.

Below, you will use the results of your analysis to lobby politicians in support of a federal YIMBY-incentive program. In particular, you will prepare a short policy brief-a sort of written elevator pitch-that is designed to help you find congressional representatives whose districts would benefit from adopting YIMBY-type policies and whose reelection prospects would be improved by sponsoring this type of bill. The political analysis here is a bit superficial, but it should give you a taste of how you might use different data analytic techniques to tailor an argument to a specific audience.

In this mini-project, you will:

- Practice use of

dplyrfor combining and manipulating data from distinct sources - Practice use of

ggplot2to create compelling statistical visualizations. Note that this assignment only requires “basic”ggplot2visualizations like scatterplots or line graphs; Mini-Project #03 will require more complex visualization. - Practice summarizing complex analytical pipelines for non-technical audiences.

As with Mini-Project #01, a major portion of your grade is based on the communication and writing elements of this mini-project, so make sure to budget sufficient time for the writing process. Code without context has little practical value - you are always writing and conveying your analysis to a specific target audience.

Student Responsbilities

Recall our basic analytic workflow and table of student responsibilities:

- Data Ingest and Cleaning: Given a single data source, read it into

Rand transform it to a reasonably useful standardized format. - Data Combination and Alignment: Combine multiple data sources to enable insights not possible from a single source.

- Descriptive Statistical Analysis: Take a data table and compute informative summary statistics from both the entire population and relevant subgroups

- Data Visualization: Generate insightful data visualizations to spur insights not attainable from point statistics

- Inferential Statistical Analysis and Modeling: Develop relevant predictive models and statistical analyses to generate insights about the underlying population and not simply the data at hand.

| Ingest and Cleaning | Combination and Alignment | Descriptive Statistical Analysis | Visualization | |

|---|---|---|---|---|

| Mini-Project #01 | ✓ | |||

| Mini-Project #02 | ✓ | ✓ | ½ | |

| Mini-Project #03 | ½ | ✓ | ✓ | ✓ |

| Mini-Project #04 | ✓ | ✓ | ✓ | ✓ |

In this mini-project, you are mainly responsible for data alignment and basic statistical analyses. While not the main focus of this mini-project, you are also expected to provide basic data visualizations to support your findings; the grading rubric, below, emphasizes the dplyr tools used in this project, but reports without any visualization will be penalized severely. Note that data visualization will play a larger role in Mini-Project #03.

As before, I will provide code to automatically download and read in the data used for this project. Also note that, as compared with Mini-Project #01, I am providing less ‘scaffolding’: students are more responsible for directing their own analyses.

Rubric

STA 9750 Mini-Projects are evaluated using peer grading with meta-review by the course GTAs. The following rubric will be used for this mini-project:

| Course Element | Excellent (9-10) | Great (7-8) | Good (5-6) | Adequate (3-4) | Needs Improvements (1-2) |

|---|---|---|---|---|---|

| Written Communication | Report is well-written and flows naturally. Motivation for key steps is clearly explained to reader without excessive detail. Key findings are highlighted and appropriately given context. | Report has no grammatical or writing issues. Writing is accessible and flows naturally. Key findings are highlighted, but lack suitable motivation and context. | Report has no grammatical or writing issues. Key findings are present but insufficiently highlighted. | Writing is intelligible, but has some grammatical errors. Key findings are obscured. | Report exhibits significant weakness in written communication. Key points are difficult to discern. |

| Project Skeleton | Code completes all instructor-provided tasks correctly. Responses to open-ended tasks are particularly insightful and creative. | Code completes all instructor-provided tasks satisfactorily. | Response to one instructor provided task is skipped, incorrect, or otherwise incomplete. | Responses to two instructor provided tasks are skipped, incorrect, or otherwise incomplete. | Response to three or more instructor provided tasks are skipped, incorrect, or otherwise incomplete. |

Formatting & Display |

Tables have well-formatted column names, suitable numbers of digits, and attractive presentation. Figures are ‘publication-quality,’ with suitable axis labels, well-chosen structure, attractive color schemes, titles, subtitles, and captions, etc. |

Tables are well-formatted, but still have room for improvement. Figures are above ‘exploratory-quality,’ but do not reach full ‘publication-quality.’ |

Tables lack significant ‘polish’ and need improvement in substance (filtering and down-selecting of presented data) or style. Figures are suitable to support claims made, but are ‘exploratory-quality,’ reflecting minimal effort to customize and ‘polish’ beyond |

Unfiltered ‘data dump’ instead of curated table. Baseline figures that do not fully support claims made. |

Report lacks basic tables; OR report lacks basic figures. |

Code Quality |

Code is (near) flawless. Code passes all |

Comments give context of the analysis, not simply defining functions used in a particular line. |

Code has well-chosen variable names and basic comments. |

Code executes properly, but is difficult to read. |

Code fails to execute properly. |

Data Preparation |

Automatic (10/10) if mini-project submitted includes and runs instructor-provided code. Out of scope for this mini-project. |

For this mini-project, no more than 5 total points of extra credit can be be awarded. Opportunities for extra credit exist for students who go above and beyond the instructor-provided scaffolding. Specific opportunities for extra credit can be found below.

Students pursuing careers in data analytics are strongly encouraged to go beyond the strict ambit of the mini-projects to

- further refine their skills;

- learn additional techniques that can be used in the final course project; and

- develop a more impressive professional portfolio.

Because students are encouraged to use STA 9750 mini-projects as the basis for a professional portfolio, the basic skeleton of each project will be released under a fairly permissive usage license. Take advantage of it!

Submission Instructions

After completing the analysis, write up your findings, showing all of your code, using a dynamic quarto document and post it to your course repository. The qmd file should be named mp02.qmd (lower case!) so the rendered document can be found at docs/mp02.html in the student’s repository and will be served at the URL:1

https://<GITHUB_ID>.github.io/STA9750-2025-FALL/mp02.html

You can use the helper function mp_start available at in the Course Helper Functions to create a file with the appropriate name and some meta-data already included. Do so by running the following command at the R Console:

source("https://michael-weylandt.com/STA9750/load_helpers.R"); mp_start(N=02)

After completing this mini-project, upload your rendered output and necessary ancillary files to GitHub to make sure your site works. The mp_submission_ready function in the Course Helper Functions can perform some of these checks automatically. You can run this function by running the following commands at the R Console:

source("https://michael-weylandt.com/STA9750/load_helpers.R"); mp_submission_ready(N=02)

Once you confirm this website works (substituting <GITHUB_ID> for the actual GitHub username provided to the professor in MP#00 of course), open a GitHub issue on the instructor’s repository to submit your completed work.

The easiest way to do so is by use of the mp_submission_create function in the Course Helper Functions, which can be used by running the following command at the R Console:

source("https://michael-weylandt.com/STA9750/load_helpers.R"); mp_submission_create(N=02)

Alternatively, if you wish to submit manually, open a new issue at

https://github.com/michaelweylandt/STA9750-2025-FALL/issues/new.

Title the issue STA 9750 <GITHUB_ID> MiniProject #02 and fill in the following text for the issue:

Hi @michaelweylandt!

I've uploaded my work for MiniProject #**02** - check it out!

<https://<GITHUB_ID>.github.io/STA9750-2025-FALL/mp02.html>Once the submission deadline passes, the instructor will tag classmates for peer feedback in this issue thread.

Additionally, a PDF export of this report should be submitted on Brightspace. To create a PDF from the uploaded report, simply use your browser’s ‘Print to PDF’ functionality.

NB: The analysis outline below specifies key tasks you need to perform within your write up. Your peer evaluators will check that you complete these. You are encouraged to do extra analysis, but the bolded Tasks are mandatory.

NB: Your final submission should look like a report, not simply a list of facts answering questions. Add introductions, conclusions, and your own commentary. You should be practicing both raw coding skills and written communication in all mini-projects. There is little value in data points stated without context or motivation.

Mini-Project #02: Making Backyards Affordable for All

Data Acquisition

Incorporate the instructor provided code below into your report. As you do so, make sure to look for possible “keys” on which you will be able to join datasets together.

We will focus on data from the US Census Bureau. In addition to the Constitutionally-mandated decennial census, the Census Bureau performs a variety of ongoing surveys. The most famous of these is the American Community Survey (ACS) which monitors a variety of household demographic and economic indicators constantly. The census data is a bit complicated to wrangle so we will use the tidycensus package to download what we need.

We will also need the number of new housing units built each year. This data is not available using tidycensus, but we can download and prepare it ‘manually’:

get_building_permits <- function(start_year = 2009, end_year = 2023){

fname <- glue("housing_units_{start_year}_{end_year}.csv")

fname <- file.path("data", "mp02", fname)

if(!file.exists(fname)){

HISTORICAL_YEARS <- seq(start_year, 2018)

HISTORICAL_DATA <- map(HISTORICAL_YEARS, function(yy){

historical_url <- glue("https://www.census.gov/construction/bps/txt/tb3u{yy}.txt")

LINES <- readLines(historical_url)[-c(1:11)]

CBSA_LINES <- str_detect(LINES, "^[[:digit:]]")

CBSA <- as.integer(str_sub(LINES[CBSA_LINES], 5, 10))

PERMIT_LINES <- str_detect(str_sub(LINES, 48, 53), "[[:digit:]]")

PERMITS <- as.integer(str_sub(LINES[PERMIT_LINES], 48, 53))

data_frame(CBSA = CBSA,

new_housing_units_permitted = PERMITS,

year = yy)

}) |> bind_rows()

CURRENT_YEARS <- seq(2019, end_year)

CURRENT_DATA <- map(CURRENT_YEARS, function(yy){

current_url <- glue("https://www.census.gov/construction/bps/xls/msaannual_{yy}99.xls")

temp <- tempfile()

download.file(current_url, destfile = temp, mode="wb")

fallback <- function(.f1, .f2){

function(...){

tryCatch(.f1(...),

error=function(e) .f2(...))

}

}

reader <- fallback(read_xlsx, read_xls)

reader(temp, skip=5) |>

na.omit() |>

select(CBSA, Total) |>

mutate(year = yy) |>

rename(new_housing_units_permitted = Total)

}) |> bind_rows()

ALL_DATA <- rbind(HISTORICAL_DATA, CURRENT_DATA)

write_csv(ALL_DATA, fname)

}

read_csv(fname, show_col_types=FALSE)

}

PERMITS <- get_building_permits()For this project, we will work at the level of Core-Based Statistical Areas or CBSAs. CBSAs are essentially the metropolitan (or micropolitan) area surrounding a central town. For example, many of you may live in the NYC CBSA, which corresponds to the “New York-Newark-Jersey City, NY-NJ Metropolitan Statistical Area”, but if you commute from north of the city like your instructor you may live in the Poughkeepsie CBSA, the full name of which is the “Poughkeepsie-Newburgh-Middletown, NY Metropolitan Statistical Area”. CBSAs are a unification of the older system of Metropolitan Statistical Areas (MSAs) and Micropolitan Statistical Areas (μSAs) but are finer-resolution than Combined Statistical Areas (CSAs).

Finally, we know that the best CBSA to live in may depend on your career, so it will be useful to acquire income estimates from the Bureau of Labor Statistics (BLS).

The BLS records data using the North American Industry Classification System (NAICS) coding system. The following code will download the latest NAICS data schema and manipulate it into a format suitable for analysis.

library(httr2)

library(rvest)

get_bls_industry_codes <- function(){

fname <- file.path("data", "mp02", "bls_industry_codes.csv")

library(dplyr)

library(tidyr)

library(readr)

if(!file.exists(fname)){

resp <- request("https://www.bls.gov") |>

req_url_path("cew", "classifications", "industry", "industry-titles.htm") |>

req_headers(`User-Agent` = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:143.0) Gecko/20100101 Firefox/143.0") |>

req_error(is_error = \(resp) FALSE) |>

req_perform()

resp_check_status(resp)

naics_table <- resp_body_html(resp) |>

html_element("#naics_titles") |>

html_table() |>

mutate(title = str_trim(str_remove(str_remove(`Industry Title`, Code), "NAICS"))) |>

select(-`Industry Title`) |>

mutate(depth = if_else(nchar(Code) <= 5, nchar(Code) - 1, NA)) |>

filter(!is.na(depth))

# These were looked up manually on bls.gov after finding

# they were presented as ranges. Since there are only three

# it was easier to manually handle than to special-case everything else

naics_missing <- tibble::tribble(

~Code, ~title, ~depth,

"31", "Manufacturing", 1,

"32", "Manufacturing", 1,

"33", "Manufacturing", 1,

"44", "Retail", 1,

"45", "Retail", 1,

"48", "Transportation and Warehousing", 1,

"49", "Transportation and Warehousing", 1

)

naics_table <- bind_rows(naics_table, naics_missing)

naics_table <- naics_table |>

filter(depth == 4) |>

rename(level4_title=title) |>

mutate(level1_code = str_sub(Code, end=2),

level2_code = str_sub(Code, end=3),

level3_code = str_sub(Code, end=4)) |>

left_join(naics_table, join_by(level1_code == Code)) |>

rename(level1_title=title) |>

left_join(naics_table, join_by(level2_code == Code)) |>

rename(level2_title=title) |>

left_join(naics_table, join_by(level3_code == Code)) |>

rename(level3_title=title) |>

select(-starts_with("depth")) |>

rename(level4_code = Code) |>

select(level1_title, level2_title, level3_title, level4_title,

level1_code, level2_code, level3_code, level4_code) |>

drop_na() |>

mutate(across(contains("code"), as.integer))

write_csv(naics_table, fname)

}

read_csv(fname, show_col_types=FALSE)

}

INDUSTRY_CODES <- get_bls_industry_codes()Finally, the BLS Quarterly Census of Employment and Wages

library(httr2)

library(rvest)

get_bls_qcew_annual_averages <- function(start_year=2009, end_year=2023){

fname <- glue("bls_qcew_{start_year}_{end_year}.csv.gz")

fname <- file.path("data", "mp02", fname)

YEARS <- seq(start_year, end_year)

YEARS <- YEARS[YEARS != 2020] # Drop Covid year to match ACS

if(!file.exists(fname)){

ALL_DATA <- map(YEARS, .progress=TRUE, possibly(function(yy){

fname_inner <- file.path("data", "mp02", glue("{yy}_qcew_annual_singlefile.zip"))

if(!file.exists(fname_inner)){

request("https://www.bls.gov") |>

req_url_path("cew", "data", "files", yy, "csv",

glue("{yy}_annual_singlefile.zip")) |>

req_headers(`User-Agent` = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:143.0) Gecko/20100101 Firefox/143.0") |>

req_retry(max_tries=5) |>

req_perform(fname_inner)

}

if(file.info(fname_inner)$size < 755e5){

warning(sQuote(fname_inner), "appears corrupted. Please delete and retry this step.")

}

read_csv(fname_inner,

show_col_types=FALSE) |>

mutate(YEAR = yy) |>

select(area_fips,

industry_code,

annual_avg_emplvl,

total_annual_wages,

YEAR) |>

filter(nchar(industry_code) <= 5,

str_starts(area_fips, "C")) |>

filter(str_detect(industry_code, "-", negate=TRUE)) |>

mutate(FIPS = area_fips,

INDUSTRY = as.integer(industry_code),

EMPLOYMENT = as.integer(annual_avg_emplvl),

TOTAL_WAGES = total_annual_wages) |>

select(-area_fips,

-industry_code,

-annual_avg_emplvl,

-total_annual_wages) |>

# 10 is a special value: "all industries" , so omit

filter(INDUSTRY != 10) |>

mutate(AVG_WAGE = TOTAL_WAGES / EMPLOYMENT)

})) |> bind_rows()

write_csv(ALL_DATA, fname)

}

ALL_DATA <- read_csv(fname, show_col_types=FALSE)

ALL_DATA_YEARS <- unique(ALL_DATA$YEAR)

YEARS_DIFF <- setdiff(YEARS, ALL_DATA_YEARS)

if(length(YEARS_DIFF) > 0){

stop("Download failed for the following years: ", YEARS_DIFF,

". Please delete intermediate files and try again.")

}

ALL_DATA

}

WAGES <- get_bls_qcew_annual_averages()These data sources are rather messy - especially the building permit files - but the above code should work without manual intervention. Please contact the instructor on the course discussion platform if you have issues.

Some of these files are large, especially the QCEW data, and may take a bit of time to download and initially process. You are strongly encouraged to do this step as soon as possible so that you can seek help from the instructor if necessary.

If you receive an error message about a zip file being corrupted during download, please delete that file and try again. This error is usually transient.

The QCEW data is rather large and totals about 1.1G raw, before we down sample and compress into a single file. Once you have run this code once and created data/mp02/bls_qcew_2009_2023.csv.gz you can delete all of the annual qcew_annual_singlefile.zip files to save space.

Data Integration and Initial Exploration

Before continuing, you may wish to complete Extra Credit Opportunity #01 below.

Now that we have all of our data downloaded and cleaned up, let’s perform some initial analysis to familiarize ourselves with the data. These questions are not necessarily relevant to your final findings, though they may inspire some later thoughts. These are principally designed to help you build fluency with the tables and to make sure you know what data you have access to.

Using the functions of the dplyr package, answer the following questions. For most of these questions, you will need to join (at least) two tables together.

Which CBSA (by name) permitted the largest number of new housing units in the decade from 2010 to 2019 (inclusive)?

-

In what year did Albuquerque, NM (CBSA Number 10740) permit the most new housing units?

Hint: There is a Covid-19 data artifact here that may trip you up if you do not look at your answer closely.

-

Which state (not CBSA) had the highest average individual income in 2015? To answer this question, you will need to first compute the total income per CBSA by multiplying the average household income by the number of households, and then sum total income and total population across all CBSAs in a state. With these numbers, you can answer this question.

Hint: The following function can be used to extract the principal state for a CBSA. Some CBSAs split across multiple states, e.g., the NYC CBSA continuing into NJ and CT, so this picks the one that appears first in the name.

... |> mutate(state = str_extract(NAME, ", (.{2})", group=1))You can also construct a data frame that connects state abbreviations to full names as follows:

state_df <- data.frame(abb = c(state.abb, "DC", "PR"), name = c(state.name, "District of Columbia", "Puerto Rico")) -

Data scientists and business analysts are recorded under NAICS code

5182. What is the last year in which the NYC CBSA had the most data scientists in the country? In recent, the San Francisco CBSA has had the most data scientists.For this question, you may simply create a table of which CBSA had the most data scientists each year and then answer the question in the following text.

Note that the Census Bureau and the BLS use different conventions when recording CBSA identification numbers. To join these tables together, you will need to create a new column to join on. In particular, the BLS will record a code a “C1234” corresponding to a Census identifier of “12340” for the same region. To match these up, it is easiest to create a new column in the BLS tables which transforms values like “C1234” into “12340”-formatted values.

The following code may help:

_BLS TABLE_ <- _BLS TABLE_ |> mutate(_NEW COLUMN_ = code_changing(_BLS CBSA ID_)) inner_join(_CENSUS TABLE_, _BLS TABLE_, join_by(CBSA == _NEW COLUMN_))You will need to insert appropriate table and column names replacing the names surrounded by underscores to adapt this for your own use. You will also need to replace

code_changingwith an actual set of function calls.There are several ways to do this, but the most straightforward is probably a combination of:

as.doublestr_removepaste0

To determine the right order to use these, define a variable

x <- "C1234"and figure out the sequence of function calls that satisfies:func1(func2(func3(x, argument?), argument?), argument?) == 12340Here, substitute

func1,func2,func3, with some combination of the commands noted above and supply additional arguments where needed.Hint: Putting

filterstatements beforejoinstatements (when possible) typically leads to faster code. What fraction of total wages in the NYC CBSA was earned by people employed in the finance and insurance industries (NAICS code 52)? In what year did this fraction peak?

Using the functions of the ggplot2 package, create suitable visualizations for each of the following relationships.

The relationship between monthly rent and average household income per CBSA in 2009.

The relationship between total employment and total employment in the health care and social services sector (NAICS 62) across different CBSAs. Design your visualization so that it is possible to see the evolution of this relationship over time.

-

The evolution of average household size over time. Use different lines to represent different CBSAs.

See Extra Credit Opportunity #02 below for ways to make this visualization particularly compelling.

For each plot, make sure your final visualization is “publication-ready” and equipped with, at a minimum:

- Proper \(x\)- and \(y\)-axis labels

- A meaningful title

- Proper units for axis ticks (if appropriate)

- Proper legend titles (if appropriate)

- Proper font sizes so that all text is legible.

Building Indices of Housing Affordability and Housing Stock Growth

Now that you hopefully have a sense of the structure of this data, it is time to begin our analysis in earnest. We will begin by constructing an initial metric of rent affordability by combining our INCOME, RENT, and POPULATION tables from above. Using a suitable join, merge these three into a single table which can be used to perform the following task.

Join together the INCOME and RENT tables. Using this data, construct a suitable measure of rent burden: that is, how much of income a typical resident spends on housing. You should take standard ratio like rent-to-income as a baseline, but modify this ratio to increase interpretability:

-

Standardization: Define a baseline value around which your metric is centered. Some possible baseline structures may include:

- Setting 0, 50, or 100 to the long-term national average

- Setting 0, 50, or 100 to the national average in the first year of your study

- Setting 0 to the lowest value and 100 to the highest value in the study

-

Scaling and transformation: Standardize your metric to increase interpretability. Some standardizations may include:

- Setting 0 to the lowest value, 100 to the highest value, and linearly scaling in between

- Dividing by the standard deviation so that values can be interpreted as “\(k\) standard deviations above average”

- Dividing by the baseline value so that values can be interpreted as “\(k\) times baseline”

Once you have created your metric, create (at least) two tables to introduce it to your readers and visualize them using the DT package from Mini-Project #01:

- Pick a single Metropolitan Area and see how rent burden has changed over time

- Highlight the Metro Areas highest and lowest with the highest and lowest rent burden

Note that your choice of baselining and standardization do not need to be made independently: the best standardization may depend on the baseline used and vice versa. Note that your standardization may also include non-linear transformations (like a log transform) if you find this useful in creating an easily interpreted measure.

Now that we have decided on a way of computing rent pressure, we next want to the ‘building-friendliness’ of various metropolitan areas. In particular, we want to identify CBSAs that permit a large number of new housing units relative to their existing population. Even more than that, we want to identify CBSAs where the total number of housing units permitted is growing faster than the population, as these have the greatest chance of being highly affordable.

Join together the POPULATION and PERMITS tables. Using this data, construct a suitable measure of housing growth: that is, how many new housing units are permitted in a CBSA, relative to both the current number of residents and the overall population growth of that CBSA. Because this metric takes into account growth patterns, it should depend on a fixed lookback-window of 5 years used to estimate population growth.

Before constructing your metric, use dplyr functionality to calculate population growth within each CBSA over a rolling 5 year window. Since your data begins in 2009, your five-year estimates of population growth will start in 2014. The lag function may be useful here.

Construct your metric in two parts:

- An ‘instantaneous’ measure of housing growth that depends on the absolute population of a CBSA and the number of new housing units permitted that year.

- A ‘rate-based’ measure of housing growth that compares the number of housing permits to the population growth over a 5 year lookback window.

For each of these, suitably standardize and baseline your metric. You may choose to use housing permits and population on their own, construct ratios, or other transformations necessary to construct suitable metrics.

Once you have developed the two individual metrics, construct two tables identifying the CBSAs that score particularly high or low on each metric.

Finally, develop a composite score that combines these two metrics. This may be a sum, weighted sum, maximum, minimum, or any other combination function you feel works best.2 As before, identify CBSAs that do particularly well and particularly poorly on your metric.

Because homebuilding is a slow process, it may be worth aggregating over years. You can use the cummean-family of functions from dplyr to compute cumulative statistics or the roll_* functions from the RcppRoll package to get a rolling estimate, e.g., a rolling 5-year average.

Note that both of these tasks, though especially Task 3 allow for significant subjectivity in constructing your metrics. There is no one true way to measure these type of complex dynamics: you might care more about providing housing for existing residents than new residents, or, as the leader of a town with dwindling population, it might be more important to you to have a rich housing stock to attract new residents. Examining which CBSAs score particularly well or particularly poorly on various metrics will help you check that your metrics are actually capturing what you intend for them to capture.

Create (at least) two visualizations to investigate the relationships between your Rent Burden and Housing Growth metrics. Using these plots, identify the most “YIMBY” CBSAs as ones which:

- had relatively high rent burden in the early part of the study period;

- have had a decrease in rent burden over the study period;

- have had population growth over the study period; and

- have had above-average housing growth during the study period.

A CBSA exhibiting all of these qualities is (arguably) an example of YIMBY success and is not a city in decline, as would be indicated by falling population resulting in lower rents.

Policy Brief

You are a political lobbyist for a national YIMBY organization, trying to get Congress to establish a federal program to encourage local municipalities to adopt a more-YIMBY set of housing policies. In order to get your legislation passed, you first need to identify a pair of representatives to sponsor your bill. You want to find:

- A primary sponsor who represents a city that has had YIMBY success; and

- A co-sponsor who represents a more NIMBY city, that is one with high rent and low housing development.

Once you have identified your target representatives, you also want to help them find support from labor unions or industry trade organizations who will help get your bill passed. To do so, identify two important occupations and argue how your bill will benefit voters in those occupations. In particular, you want to find occupations that:

- Have a non-trivial number of employees in each city. (It does little good to lobby NYC on the basis that a policy will be good for cattle ranchers; it’s far more useful to argue that a given policy will benefit firefighters to get the support of FDNY lobbyists.)

- Argue why your policies seem to be favorable for the interest groups, either by arguing that members of those interest groups may spend less on rent (comparing your ‘good’ and ‘bad’ cities) or that their income may rise (if folks in general spend less on rent, that’s good for musician income since folks will have more money to do to live concerts).

Finally, your potential sponsors will want to understand what metrics the government can use to identify ‘good’ (high-YIMBY) cities and to target funding appropriately. Provide a brief non-technical description of your rent burden and housing growth metrics.

Put all of this information together into a “policy brief” (one page non-technical summary document) that can be shared with your most likely bill sponsors. Note that this document needs to be brief and easy to read, covering only the highlights and giving the sponsors the information they need to:

- Explain why their hometown needs this bill to jump-start growth and/or would be likely to receive grants to fund continued growth if this bill passes;

- Rally local supporters from politically powerful groups in support of your bill; and

- Explain the metrics you recommend so that their legal staff can start translating from math into lawyer-speak.

Write up a policy brief covering the key points above. You want your brief to be well-researched, so you can provide supporting evidence if requested, but short enough that you can use it as an “elevator pitch.” Be sure to note the hometowns of your proposed congressional sponsors. (You do not need to find the names of actual politicians: saying “a sponsor from Houston and from New York” will suffice.)

See Extra Credit Opportunity #03 for an additional way to make your lobbying efforts more likely to succeed.

Extra Credit Opportunities

In addition to the extra credit possibilities listed in the rubric above, there are three Extra Credit Opportunities where extra points can be awarded for specific additional tasks. The amount of the extra credit is typically not proportional to the work required to complete these tasks, but I provide these for students who want to dive deeper into this project and develop additional data analysis skills not covered in the main part of this mini-project.

For this mini-project, no more than 5 total points of extra credit may be awarded.

Extra Credit Opportunity #01: Relationship Diagram

Possible Extra Credit: Up to 2 Points

After completing Task 01, examine the structure of your data sets and create a suitable data relationship diagram. An data relationship diagram is a visual representation of:

- The data tables available for an analysis

- The columns (names and types) present in each table

- The possible

joinstructures that can be used to combine these tables.

Generate a suitable visualization and include it in your final submission. Tools like https://dbdiagram.io can be useful for creating this visualization, but you may ultimately use whatever tool is most convenient for you (even a photo of an on-paper sketch). In determining the amount of extra credit awarded, evaluators will look at both the aesthetics of the visualization and the accuracy of the depicted relationships.

Note that this data is not ‘fully normalized’ so there may be cross-table relationships between one or more columns, particularly when connecting a ‘wide’ and ‘long’ data set. Note also that some of the join keys are a bit more complex than typical of a standard database set up so you may wish to annotate when a relationship more complex than simple quality is required.

Extra Credit Opportunity #02: Highlighting Important Units in a Spaghetti Plot

Possible Extra Credit: Up to 1 Point

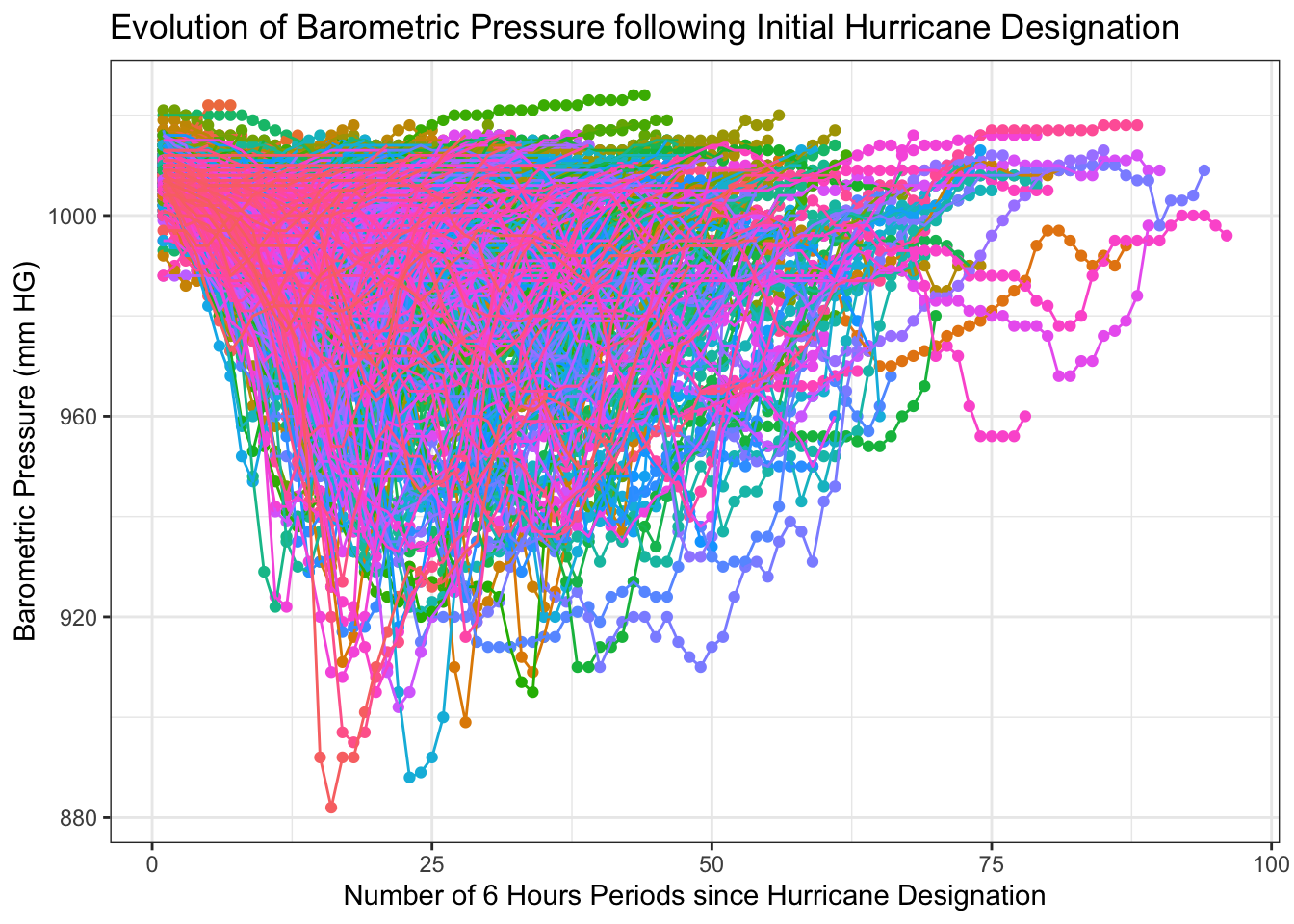

When visualizing the evolution of many units over time, overlapping line plots often become quite difficult to read, resulting in spaghetti plots. E.g.,

Code

storms |>

mutate(storm_name = paste0(name, " (", year, ")")) |>

group_by(storm_name) |>

mutate(observation = row_number()) |>

ggplot(aes(x=observation, y=pressure, color=storm_name)) +

geom_point() +

geom_line() +

guides(color="none") +

xlab("Number of 6 Hours Periods since Hurricane Designation") +

ylab("Barometric Pressure (mm HG)") +

theme_bw() +

ggtitle("Evolution of Barometric Pressure following Initial Hurricane Designation")

Even after removing the legend, this plot is still quite dysfunctional. With this many lines, it is essentially impossible to design a color palette in a way that lets a reader distinguish all different lines. A useful strategy is to instead highlight a small number of important lines, ‘low-lighting’ the other lines so that they only serve as a background of ‘representative samples’. The gghighlight package provides tools for making this sort of plot.

For a point of extra credit, use the gghighlight package to highlight the NYC and Los Angeles CBSAs in your visualization of household size over time. Note that you may need to create a new variable indicating whether a certain row should be highlighted or not.

Extra Credit Opportunity #03: Increasing Millenial Appeal

Possible Extra Credit: Up to 2 Points

Modify your analysis and policy brief to include at least one variable that can be reasonably be interpreted as “something appealing to young people.” Politicians and local leaders always want to attract younger citizens, so if your metric includes something that specifically factors in millenial appeal, you may have a better chance of getting it through congress. You might consider things like “number of folks working in the arts”, “number of persons in a certain age bracket”, “number of folks of a certain race (or mixed race)”, “number of families with young children”, or similar. Be creative here!

The ACS has many variables and it can often be somewhat difficult to find them. If you run the command

tidycensus::load_variables(2024, "acs1") |> View()R will bring up a list of all census tables by their ID and brief description. You can look through this list to find variables that might work for this extension. Commands like:

library(tidyverse)

x <- coll("THING TO LOOK FOR", ignore_case = TRUE)

tidycensus::load_variables(2024, "acs1") |>

filter(str_detect(label, x) | str_detect(concept, x))will look for variables with a certain term in their description; e.g.

library(tidyverse)

x <- coll("kitchen", ignore_case = TRUE)

tidycensus::load_variables(2024, "acs1") |>

filter(str_detect(label, x) | str_detect(concept, x))# A tibble: 23 × 3

name label concept

<chr> <chr> <chr>

1 B25051_001 Estimate!!Total: Kitche…

2 B25051_002 Estimate!!Total:!!Complete kitchen facilities Kitche…

3 B25051_003 Estimate!!Total:!!Lacking complete kitchen facilities Kitche…

4 B25052_001 Estimate!!Total: Kitche…

5 B25052_002 Estimate!!Total:!!Complete kitchen facilities Kitche…

6 B25052_003 Estimate!!Total:!!Lacking complete kitchen facilities Kitche…

7 B25053_001 Estimate!!Total: Tenure…

8 B25053_002 Estimate!!Total:!!Owner occupied: Tenure…

9 B25053_003 Estimate!!Total:!!Owner occupied:!!Complete kitchen facil… Tenure…

10 B25053_004 Estimate!!Total:!!Owner occupied:!!Lacking complete kitch… Tenure…

# ℹ 13 more rowsGoogle is also helpful in finding relevant tables. Note that occupation information can be found in both Census tables and in the BLS data we already downloaded. In general, the BLS data is easier to use.

This work ©2025 by Michael Weylandt is licensed under a Creative Commons BY-NC-SA 4.0 license.

Footnotes

Throughout this section, replace

<GITHUB_ID>with your GitHub ID from Mini-Project #00, making sure to remove the angle brackets. Note that the automated course infrastructure will be looking for precise formatting, so follow these instructions closely.↩︎If you want to compute the maximum or minimum across two columns in

dplyr, use thepmaxorpmin(pairwise maximum/minimum) functions. The “collapsing”minandmaxfunctions will not work for this purpose.↩︎